| 2018 | 2017 | 2016 | 2015 | 2014 | 2013 | 2012 | 2011 | 2010 | 2009 | 2008 | 2007 | 2006 | 2005 | 2004 | 2004 > |

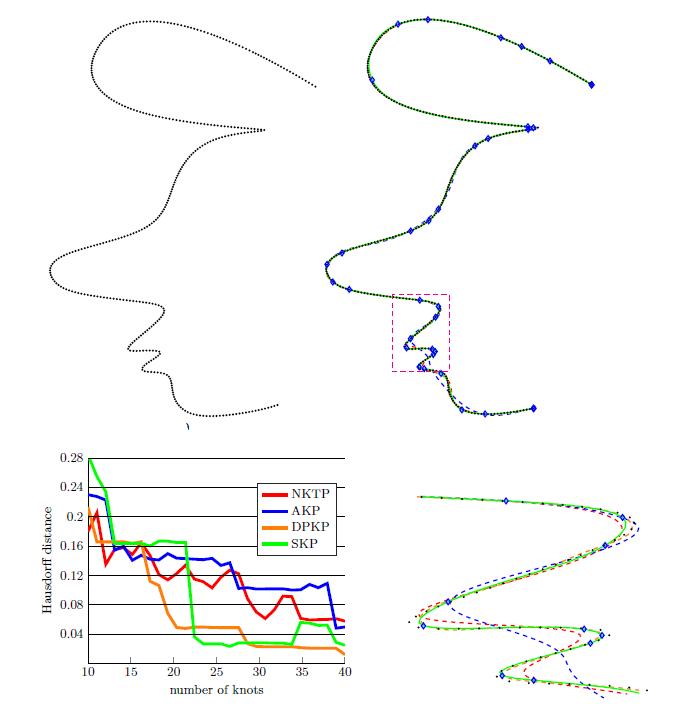

Learnt knot placement in B-spline curve approximation using support vector machines

In: Computer Aided Geometric Design, 62: 104-116, 2018.

Knot placement for curve

approximation is a well known and yet open problem in

geometric modeling. Selecting knot values that yield good

approximations is a challenging task, based largely on

heuristics and user experience. More advanced approaches

range from parametric averaging to genetic algorithms.

In this paper, we propose to use Support Vector Machines (SVMs) to determine suitable knot vectors for B-spline curve approximation. The SVMs are trained to identify locations in a sequential point cloud where knot placement will improve the approximation error. After the training phase, the SVM can assign, to each point set location, a so-called score. This score is based on geometric and differential geometric features of points. It measures the quality of each location to be used as knots in the subsequent approximation. From these scores, the final knot vector can be constructed exploring the topography of the score vector without the need for iteration or optimization in the approximation process. Knot vectors computed with our approach outperform state of the art methods and yield tighter approximations.

In this paper, we propose to use Support Vector Machines (SVMs) to determine suitable knot vectors for B-spline curve approximation. The SVMs are trained to identify locations in a sequential point cloud where knot placement will improve the approximation error. After the training phase, the SVM can assign, to each point set location, a so-called score. This score is based on geometric and differential geometric features of points. It measures the quality of each location to be used as knots in the subsequent approximation. From these scores, the final knot vector can be constructed exploring the topography of the score vector without the need for iteration or optimization in the approximation process. Knot vectors computed with our approach outperform state of the art methods and yield tighter approximations.

Available formats: pdf

Citation: BibTeX

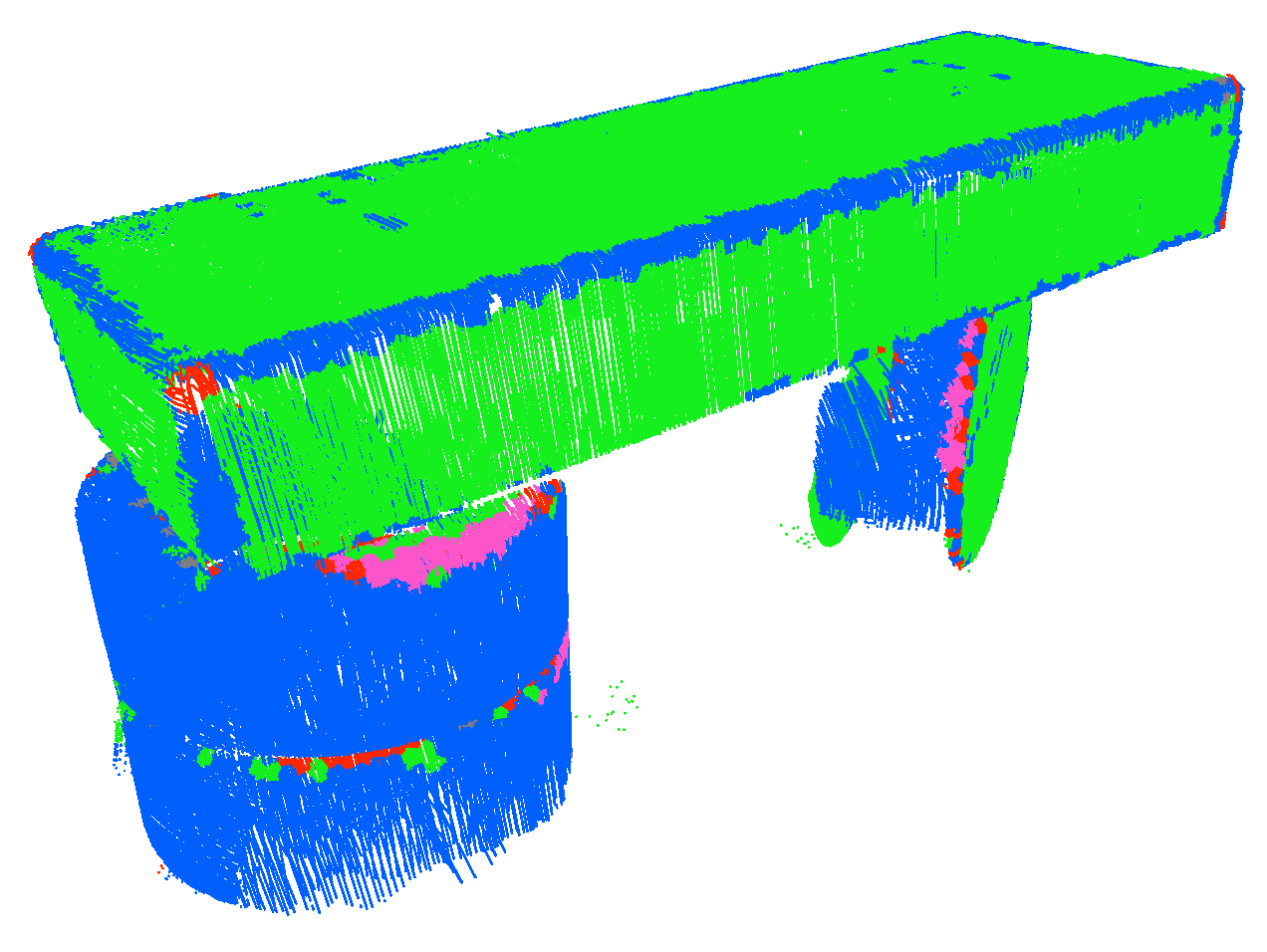

Evaluation of Features for SVM-based Classification of Geometric Primitives in Point Clouds

Laube, Franz, Umlauf

In: 15th IAPR International Conference on Machine Vision Applications (MVA), 15, 2017.

In the reverse engineering process one has to classify parts of point clouds with the correct type of geometric primitive. Features based on different geometric properties like point relations, normals, and curvature information can be used to train classifiers like Support Vector Machines (SVM). These geometric features are estimated in the local neighborhood of a point of the point cloud. The multitude of different features makes an in-depth comparison necessary. In this work we evaluate 23 features for the classification of geometric primitives in point clouds. Their performance is evaluated on SVMs when used to classify geometric primitives in simulated and real laser scanned point clouds. We also introduce a normalization of point cloud density to improve classification generalization.

In: 15th IAPR International Conference on Machine Vision Applications (MVA), 15, 2017.

In the reverse engineering process one has to classify parts of point clouds with the correct type of geometric primitive. Features based on different geometric properties like point relations, normals, and curvature information can be used to train classifiers like Support Vector Machines (SVM). These geometric features are estimated in the local neighborhood of a point of the point cloud. The multitude of different features makes an in-depth comparison necessary. In this work we evaluate 23 features for the classification of geometric primitives in point clouds. Their performance is evaluated on SVMs when used to classify geometric primitives in simulated and real laser scanned point clouds. We also introduce a normalization of point cloud density to improve classification generalization.

Available formats: pdf

Citation: BibTeX

Radiometric calibration of digital cameras using neural networks

Grunwald, Laube, Schall, Umlauf, Franz

In: Conference: Optics and Photonics for Information Processing XI.

Digital cameras ar

e used

in a large variety of scientific and industrial

applications. For most applications, the acquired data

should represent the real light intensity per pixel as

accurately as possible. However, digital cameras are

subject to physical, electronic and optical effects that

lead to errors and noise in the raw image.

Temperature-dependent dark current, read noise, optical

vignetting or different sensitivities of individual

pixels are examples of such effects. The purpose of

radiometric calibration is to improve the quality of the

resulting images by reducing the influence of the

various types of errors on the measured data and

thus improving the quality of the overall

application. In this context, we present a specialized

neural network architecture for radiometric calibration

of digital cameras. Neural networks are used to learn a

temperature- and exposure-dependent mapping from

observed gray-scale values to true light intensities for

each pixel. In contrast to classical flat-fielding,

neural networks have the potential to model nonlinear

mappings which allows for accurately capturing the

temperature dependence of the dark current and for

modeling cameras with nonlinear sensitivities. Both

scenarios are highly relevant in industrial

applications. The experimental comparison of our network

approach to classical flat-fielding shows a consistently

higher reconstruction quality, also for linear cameras.

In addition, the calibration is faster than previous

machine learning approaches based on Gaussian processes.

e used

in a large variety of scientific and industrial

applications. For most applications, the acquired data

should represent the real light intensity per pixel as

accurately as possible. However, digital cameras are

subject to physical, electronic and optical effects that

lead to errors and noise in the raw image.

Temperature-dependent dark current, read noise, optical

vignetting or different sensitivities of individual

pixels are examples of such effects. The purpose of

radiometric calibration is to improve the quality of the

resulting images by reducing the influence of the

various types of errors on the measured data and

thus improving the quality of the overall

application. In this context, we present a specialized

neural network architecture for radiometric calibration

of digital cameras. Neural networks are used to learn a

temperature- and exposure-dependent mapping from

observed gray-scale values to true light intensities for

each pixel. In contrast to classical flat-fielding,

neural networks have the potential to model nonlinear

mappings which allows for accurately capturing the

temperature dependence of the dark current and for

modeling cameras with nonlinear sensitivities. Both

scenarios are highly relevant in industrial

applications. The experimental comparison of our network

approach to classical flat-fielding shows a consistently

higher reconstruction quality, also for linear cameras.

In addition, the calibration is faster than previous

machine learning approaches based on Gaussian processes.Citation: BibTeX

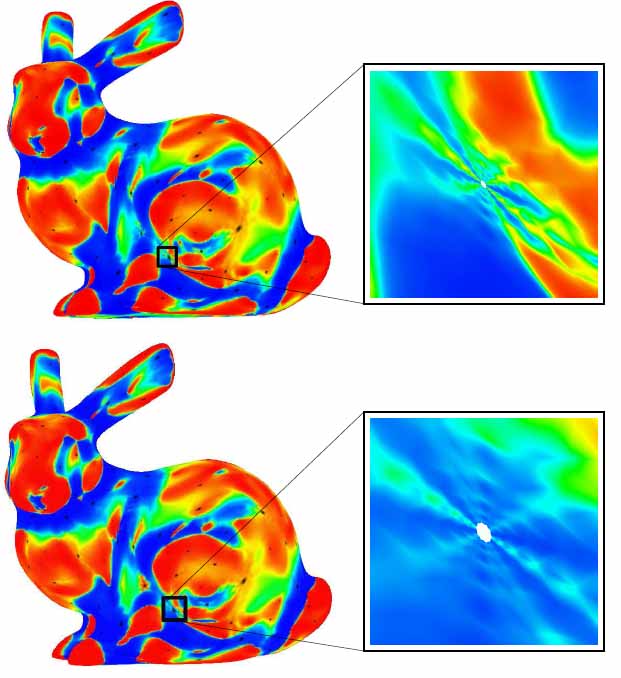

A short survey on recent methods for cage computation

Laube, Umlauf

In: BW-CAR| SINCOM, pp. 37, 2016.

Creating cages that enclose

a 3D-model of some sort is part of many preprocessing

pipelines in computational geometry. Creating a cage

of preferably lower resolution than the original model

is of special interest when performing an operation on

the original model might be to costly. The desired

operation can be applied to the cage first and then

transferred to the enclosed model. With this paper the

authors present a short survey of recent and well

known methods for cage computation. The authors would

like to give the reader an insight in common methods

and their differences.

Available formats: pdf

Citation: BibTeX

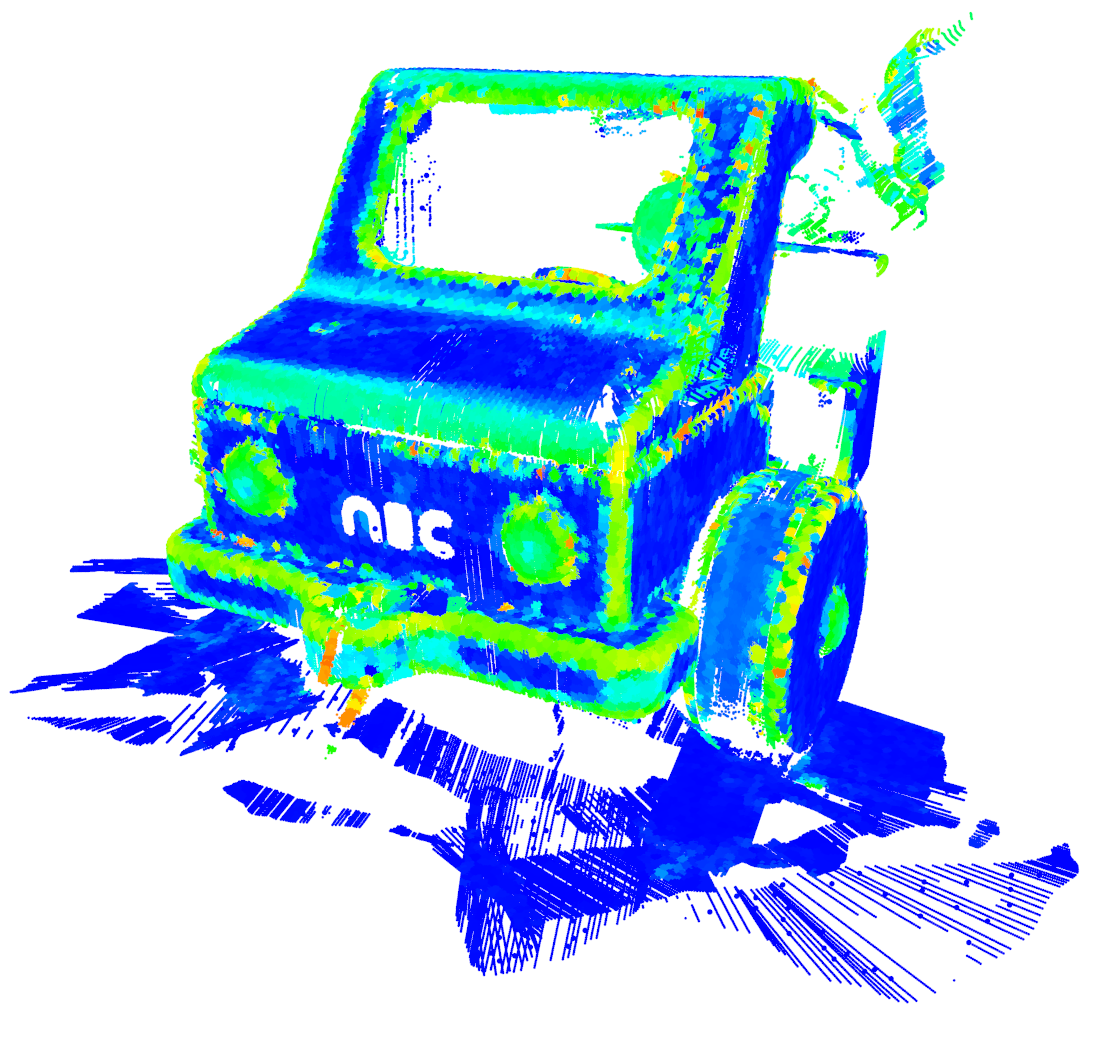

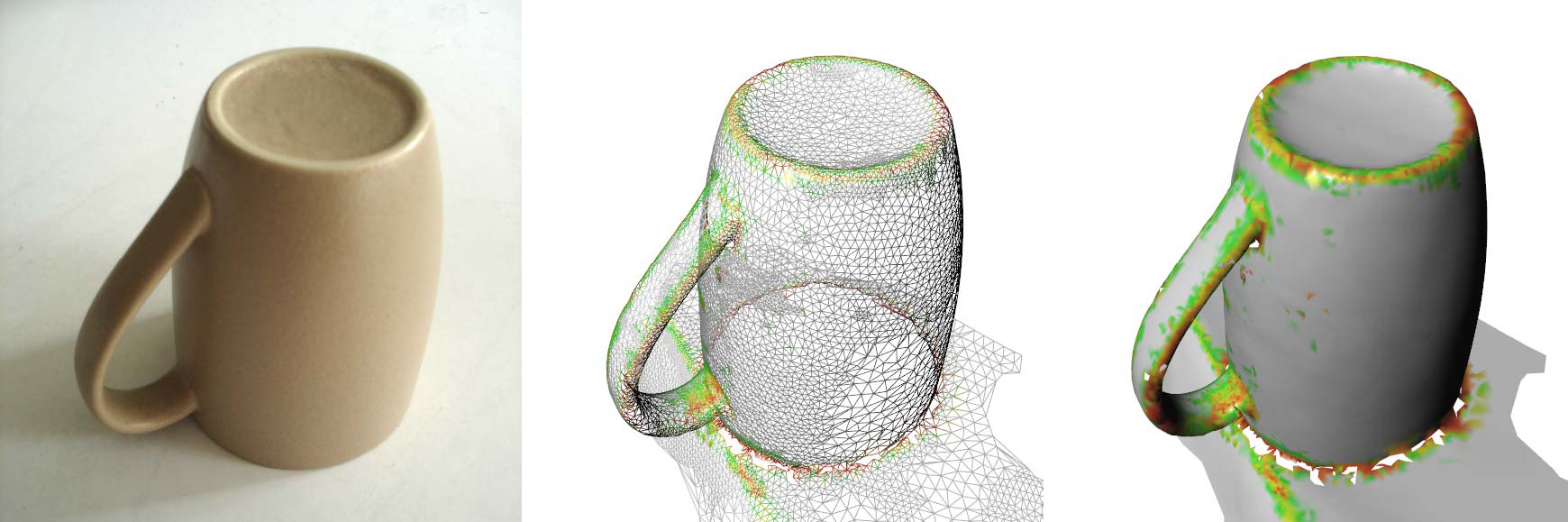

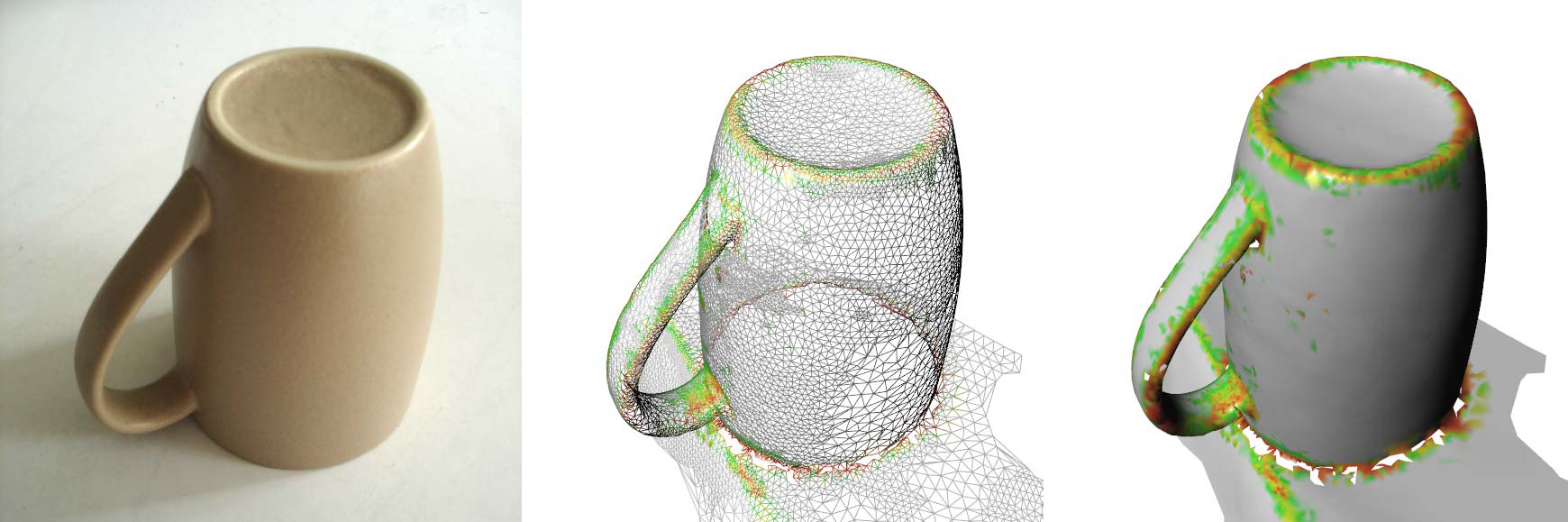

Support vector machines for classification of geometric primitives in point clouds

Caputo, Denker, Franz, Laube, Umlauf

In: Boissonnat, et al. (eds): Curves and Surfaces, Springer, 80-95, 2015.

Classification of point clouds by

different types of geometric primitives is an

essential part in the reconstruction process of CAD

geometry. We use support vector machines (SVM) to

label patches in point clouds with the class labels

tori, ellipsoids, spheres, cones, cylinders or planes.

For the classification, features based on different

geometric properties like point normals, angles, and

principal curvatures are used. These geometric

features are estimated in the local neighborhood of a

point of the point cloud. Computing these geometric

features for a random subset of the point cloud yields

a feature distribution. Different features are

combined for achieving best classification results. To

minimize the time consuming training phase of SVMs,

the geometric features are first evaluated using

linear discriminant analysis (LDA).

LDA and SVM are machine learning approaches that require an initial training phase to allow for a subsequent automatic classification of a new data set. For the training phase point clouds are generated using a simulation of a laser scanning device. Additional noise based on an laser scanner error model is added to the point clouds. The resulting LDA and SVM classifiers are then used to classify geometric primitives in simulated and real laser scanned point clouds. Compared to other approaches, where all known features are used for classification, we explicitly compare novel against known geometric features to prove their effectiveness.

Available formats: pdfLDA and SVM are machine learning approaches that require an initial training phase to allow for a subsequent automatic classification of a new data set. For the training phase point clouds are generated using a simulation of a laser scanning device. Additional noise based on an laser scanner error model is added to the point clouds. The resulting LDA and SVM classifiers are then used to classify geometric primitives in simulated and real laser scanned point clouds. Compared to other approaches, where all known features are used for classification, we explicitly compare novel against known geometric features to prove their effectiveness.

Citation: BibTeX

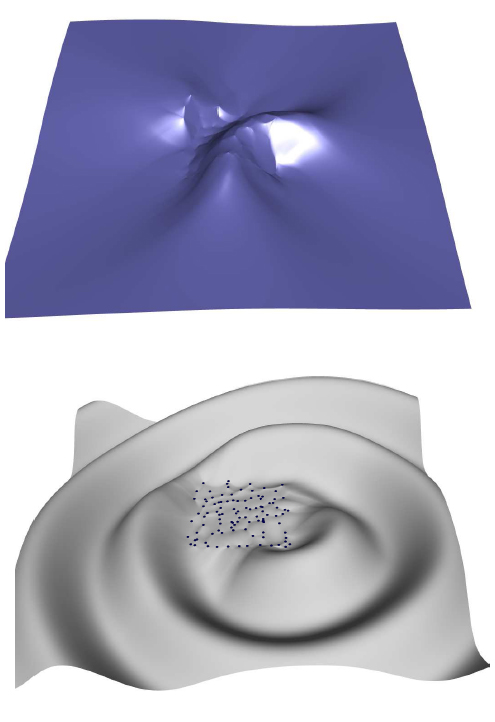

On-line CAD reconstruction with accumulated means of local geometric properties

Denker, Hamann, Umlauf

Reconstruction of hand-held laser scanner

data is used in industry primarily for reverse

engineering. Traditionally, scanning and

reconstruction are separate steps. The

operator of the laser scanner h as no feedback from

the reconstruction results. On-line reconstruction of

the CAD geometry allows for such an immediate

feedback.

We propose a method for on-line segmentation and reconstruction of CAD geometry from a stream of point data based on means that are updated on-line. These means are combined to define complex local geometric properties, e.g., to radii and center points of spherical regions. Using means of local scores, planar, cylindrical, and spherical segments are detected and extended robustly with region growing. For the on-line computation of the means we use so-called accumulated means. They allow for on-line insertion and removal of values and merging of means. Our results show that this approach can be performed on-line and is robust to noise. We demonstrate that our method reconstructs spherical, cylindrical, and planar segments on real scan data containing typical errors caused by hand-held laser scanners.

Available formats: pdfWe propose a method for on-line segmentation and reconstruction of CAD geometry from a stream of point data based on means that are updated on-line. These means are combined to define complex local geometric properties, e.g., to radii and center points of spherical regions. Using means of local scores, planar, cylindrical, and spherical segments are detected and extended robustly with region growing. For the on-line computation of the means we use so-called accumulated means. They allow for on-line insertion and removal of values and merging of means. Our results show that this approach can be performed on-line and is robust to noise. We demonstrate that our method reconstructs spherical, cylindrical, and planar segments on real scan data containing typical errors caused by hand-held laser scanners.

Citation: BibTeX

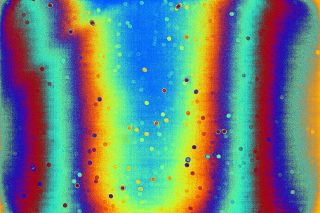

Radiometric calibration of digital cameras using Gaussian processes

Schall, Grunwald, Umlauf, Franz

In: SPIE, 2015.

Digital cameras are subject to physical,

electronic and optic effects that result in errors and

noise in the image. These effects include for example

a temperature dependent dark current, read noise,

optical vignetting or different sensitivities of

individual pixels. The task of a radiometric

calibration is to reduce these errors in the image and

thus improve the quality of the overall application.

In this work we present an algorithm for radiometric

calibration based on Gaussian processes. Gaussian

processes are a regression method widely used in

machine learning that is particularly useful in our

context. Then Gaussian process regression is used to

learn a temperature and exposure time dependent

mapping from observed gray-scale values to true light

intensities for each pixel.

Regression models based on the characteristics of single pixels suffer from excessively high runtime and thus are unsuitable for many practical applications. In contrast, a single regression model for an entire image with high spatial resolution leads to a low quality radiometric calibration, which also limits its practical use. The proposed algorithm is predicated on a partitioning of the pixels such that each pixel partition can be represented by one single regression model without quality loss. Partitioning is done by extracting features from the characteristic of each pixel and using them for lexicographic sorting. Splitting the sorted data into partitions with equal size yields the final partitions, each of which is represented by the partition centers. An individual Gaussian process regression and model selection is done for each partition. Calibration is performed by interpolating the gray-scale value of each pixel with the regression model of the respective partition. The experimental comparison of the proposed approach to classical flat field calibration shows a consistently higher reconstruction quality for the same overall number of calibration frames.

Regression models based on the characteristics of single pixels suffer from excessively high runtime and thus are unsuitable for many practical applications. In contrast, a single regression model for an entire image with high spatial resolution leads to a low quality radiometric calibration, which also limits its practical use. The proposed algorithm is predicated on a partitioning of the pixels such that each pixel partition can be represented by one single regression model without quality loss. Partitioning is done by extracting features from the characteristic of each pixel and using them for lexicographic sorting. Splitting the sorted data into partitions with equal size yields the final partitions, each of which is represented by the partition centers. An individual Gaussian process regression and model selection is done for each partition. Calibration is performed by interpolating the gray-scale value of each pixel with the regression model of the respective partition. The experimental comparison of the proposed approach to classical flat field calibration shows a consistently higher reconstruction quality for the same overall number of calibration frames.

Available formats: pdf

Citation: BibTeX

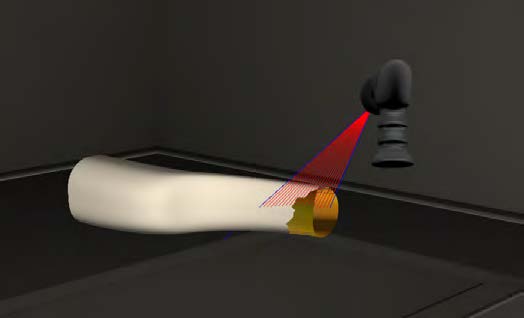

A Virtual-Reality 3d-Laser-Scan Simulation

A Virtual-Reality 3d-Laser-Scan SimulationDanhof, Schneider, Laube, Umlauf

In: BW-CAR Symposium on Information and Communication Systems (SInCom) 2, pp. 68, 2015.

We present a

3d-laser-scan simulation in virtual

reality for creating synthetic scans of CAD models.

Consisting of the virtual reality

head-mounted display Oculus Rift

and the motion controller

Razer Hydra our system can

be used like common hand-held

3d laser scanners. It

supports scanning of triangular

meshes as well as b-spline

tensor product surfaces based on

high performance ray-casting

algorithms. While point clouds of known

scanning simulations are missing the man-made

structure, our approach

overcomes this problem by

imitating real scanning scenarios.

Calculation speed, interactivity

and the resulting realistic point

clouds are the benefits of this

system.

Available formats: pdf

Citation: BibTeX

Pixel-wise Hybrid Image Registration on Wood Decors

Grunwald, Müller, Schall, Laube, Umlauf, Franz

In: BW-CAR Symposium on Information and Communication Systems (SInCom) 2, pp. 24, 2015.

The detection of

differences between images of a

printed reference and a reprinted wood decor often

requires an initial image registration

step. Depending on the

digitization method, the reprint will be displaced and

rotated with respect to the reference. The aim of

registration is to match the images as precisely as

possible. In our approach, images are first matched

globally by extracting feature

points from both images and

finding corresponding point pairs using the RANSAC

algorithm. From these correspondences,

we compute a global projective

transformation between both

images. In order to get

a pixel-wise registration, we

train a learning machine

on the point correspondences found by

RANSAC. The learning algorithm (in our case

Gaussian process regression) is

used to non-linearly interpolate

between the feature points

which results in a high

precision mage registration method

on wood decors.

Available formats: pdf

Citation: BibTeX

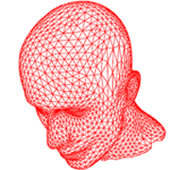

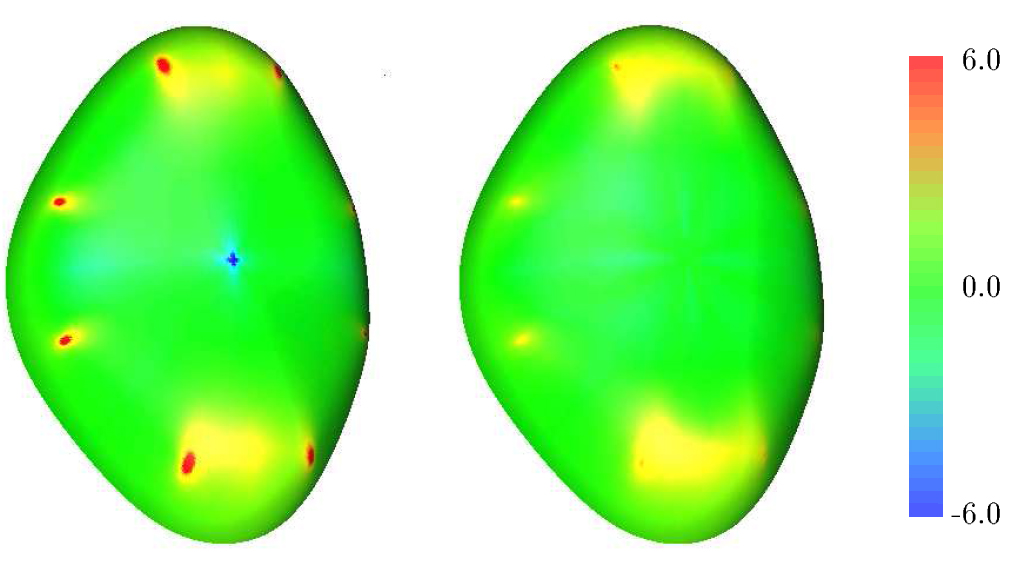

Merging multiple 3d face reconstructions

Thießen, Laube, Franz, Umlauf

In: Symposium on Information and Communication Systems, 2014.

In this paper we present a method to merge

multiple 3D face reconstructions into one common

reconstruction of higher quality. The individual

three-dimensional face reconstructions are computed by

a multi-camera stereo-matching system from different

perspectives. Using 4-Points Congruent Sets and

Iterative Closest Point the individual reconstructions

are registered. Then, the registered reconstructions

are merged based on point distance and reconstruction

tenacity. To optimize the parameters in the merging

step a kernel-based point cloud filter is used.

Finally, this filter is applied to smooth the merged

reconstruction. With this approach we are able to fill

holes in the individual reconstruction and improve the

overall visual quality.

Available formats: pdf

Citation: BibTeX

Learning geometric primitives in point clouds

Caputo, Denker, Franz, Laube, Umlauf

In: Symposium on Geometry Processing 2014, EuroGraphics, extended Abstract.

Primitive recognition in 3D point clouds

is an important aspect in reverse engineering. We

propose a method for primitive recognition based on

machine learning approaches. The machine learning

approaches used for the classification are linear

discriminant analysis (LDA) and multi-class support

vector machines (SVM). For the classification process

local geometric properties (features) of the point

cloud are computed based on point relations, normals,

and principal curvatures. For the training phase point

clouds are generated using a simulation of a laser

scanning device based on ray tracing with an error

model. The classification rates of novel, curvature

based geometric features are compared to known

geometric features to prove the effectiveness of the

approach.

Available formats: pdf

Citation: BibTeX

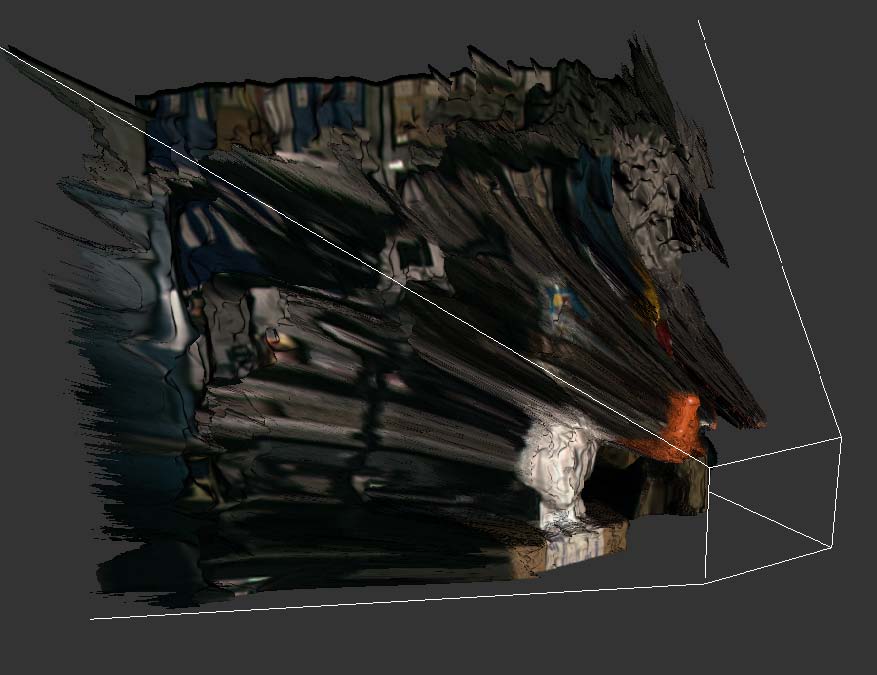

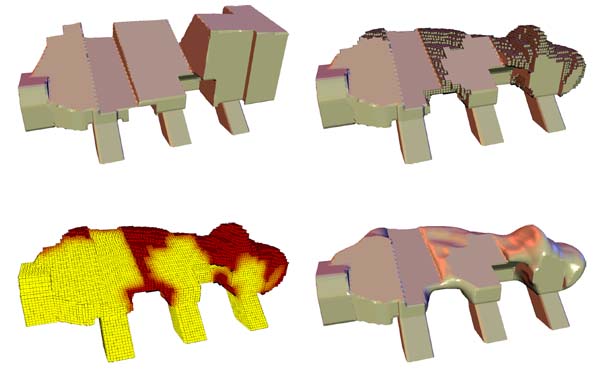

On-line reconstruction of CAD geometry

Denker, Hagel, Raible, Umlauf, Hamann

In: International Conference on 3D Vision 2013, IEEE, 151-158.

In reverse engineering and

computer-aided design (CAD) applications point cloud

data is usually manually scanned, reconstructed, and

post-processed in separated steps. When point cloud

data resulting from a scanning process do not satisfy

certain necessary reconstruction requirements, one

must perform scanning again to enable proper

reconstruction. On-line reconstruction of 3d geometry

allows one to generate and update a CAD reconstruction

online during the scanning process with an hand-held

laser scanner. Thus, regions where the scanned data is

insufficient for the reconstruction are detected on

the fly to allow an immediate correction and

improvement of the scanned data. This enables the

operator to focus on critical regions in the scanned

data to improve the reconstruction quality.

In reverse engineering and

computer-aided design (CAD) applications point cloud

data is usually manually scanned, reconstructed, and

post-processed in separated steps. When point cloud

data resulting from a scanning process do not satisfy

certain necessary reconstruction requirements, one

must perform scanning again to enable proper

reconstruction. On-line reconstruction of 3d geometry

allows one to generate and update a CAD reconstruction

online during the scanning process with an hand-held

laser scanner. Thus, regions where the scanned data is

insufficient for the reconstruction are detected on

the fly to allow an immediate correction and

improvement of the scanned data. This enables the

operator to focus on critical regions in the scanned

data to improve the reconstruction quality.We present an on-line segmentation and on-line reconstruction of basic geometric primitives. The presented methods allow for a real-time processing of a point stream. They utilize data structures that can be updated at any time when additional data from the stream has to be processed. This data is used to complete and improve the segmentation and reconstruction during the scanning process.

Available formats: pdf

Citation: BibTeX

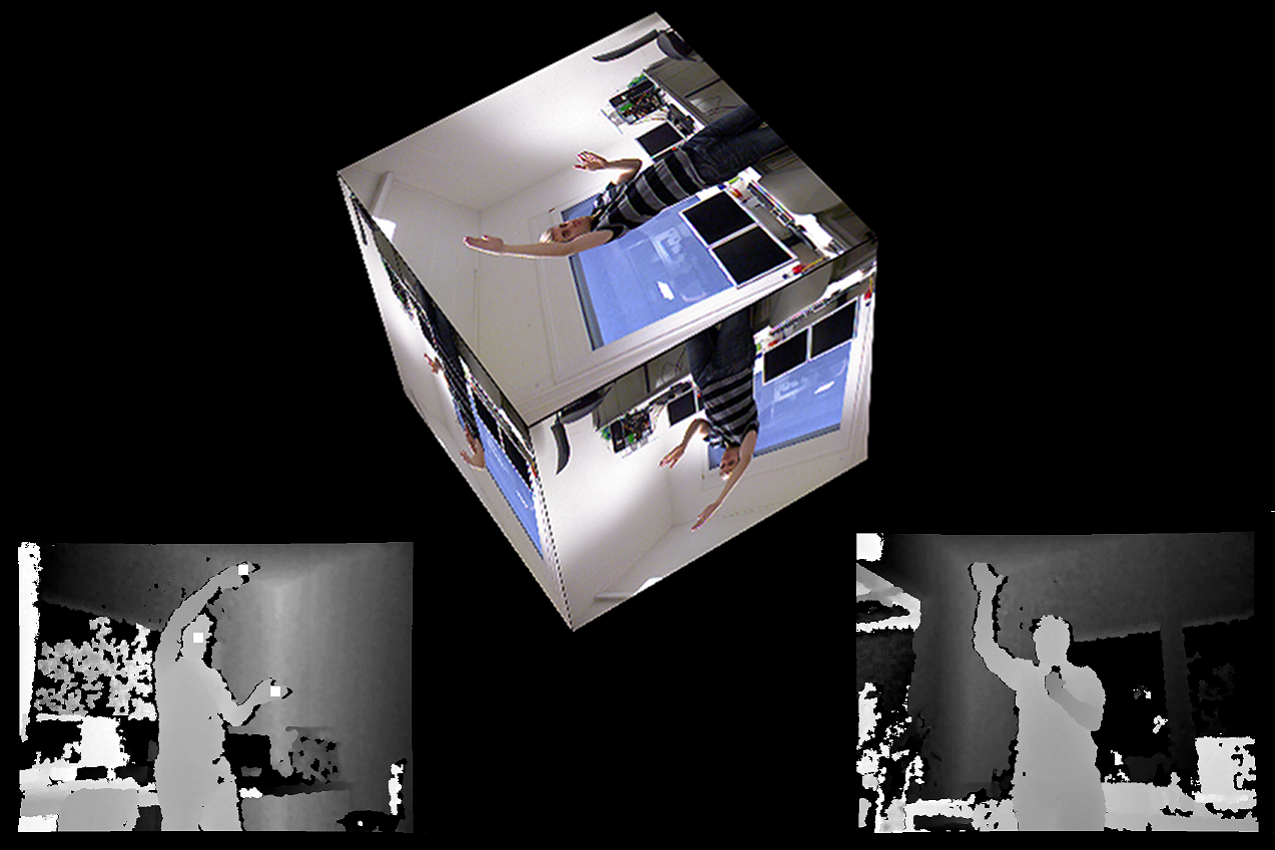

3D hand gesture recognition based on sensor fusion of commodity hardware

Caputo, Denker, Dums, Umlauf

In: Reiterer, Deussen (eds.): Mensch & Computer 2012, pp. 293-302.

With the advent of

various video game consoles and tablet devices gesture

recognition got quite popular to control computer

systems. For example touch screens allow for an

intuitive control of small 2d user interfaces with

finger gestures. For interactive manipulation of 3d

objects in a large 3d projection environment a similar

intuitive 3d interaction method is required.

In this paper, we present a dynamic 3d hand and arm gesture recognition system using commodity hardware. The input data is captured with low cost depth sensors (e.g. Microsoft Kinect) and HD color sensors (e.g. Logitech C910). Our method combines dynamic hand and arm gesture recognition based on the depth sensor with static hand gesture recognition based on the HD color sensor.

In this paper, we present a dynamic 3d hand and arm gesture recognition system using commodity hardware. The input data is captured with low cost depth sensors (e.g. Microsoft Kinect) and HD color sensors (e.g. Logitech C910). Our method combines dynamic hand and arm gesture recognition based on the depth sensor with static hand gesture recognition based on the HD color sensor.

Available formats: pdf

Citation: BibTeX

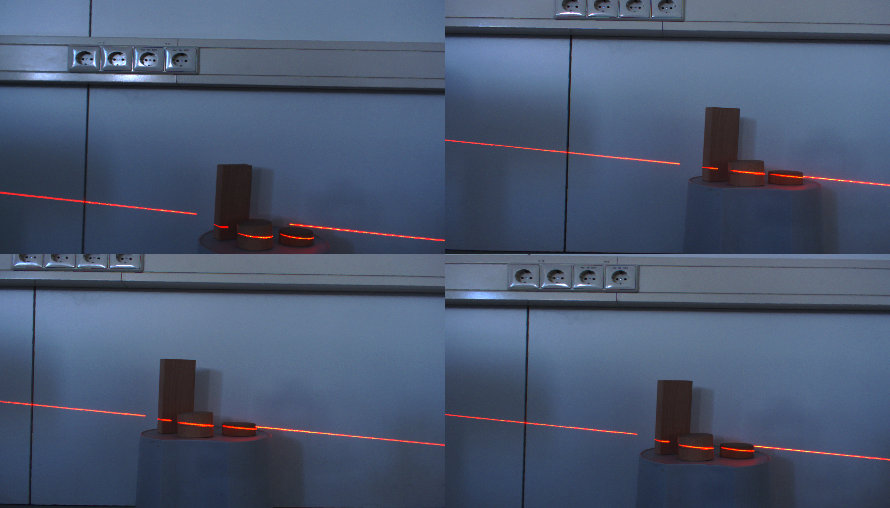

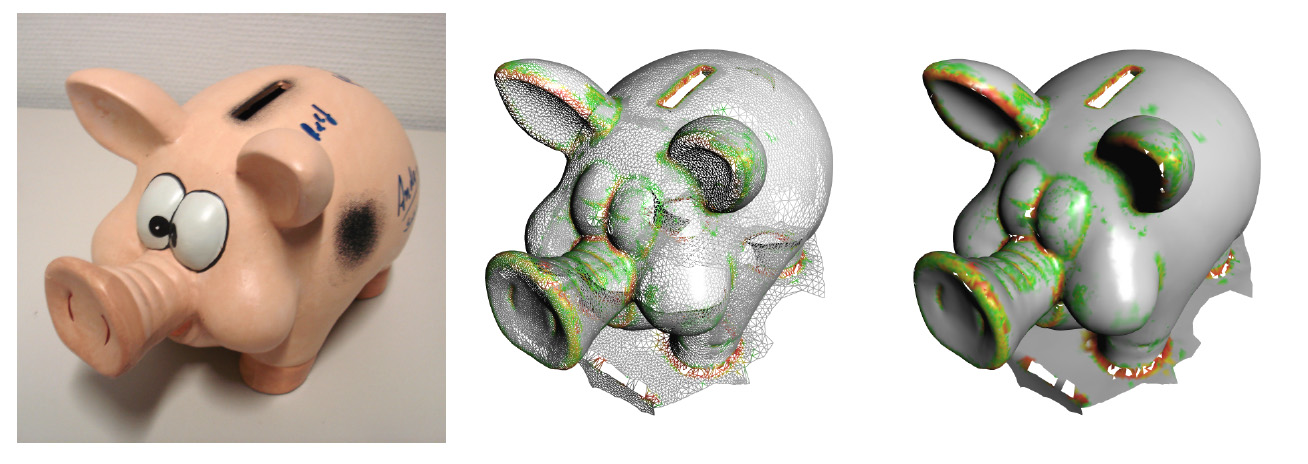

A hand-held laser scanner based on multi-camera stereo-matching

Bender, Denker, Friedrich, Hirt, Umlauf

In: Garth et al (eds.), OASICS, 17: 123-133, 2012.

Mos t

laser scanners in engineering are extended versions of

tactile measuring machines. These high

precision devices are typically very expensive and

hardware modifications are not possible without

impairing the precision of the device.

t

laser scanners in engineering are extended versions of

tactile measuring machines. These high

precision devices are typically very expensive and

hardware modifications are not possible without

impairing the precision of the device.

For these reasons we built our own laser-scanner system. It is based on a multi-camera reconstruction system developed for fast 3d face reconstructions. Based on this camera system we developed a laser-scanner using GPU accelerated stereo-matching techniques and a hand-held line-laser probe. The resulting reconstruction is solely based on the known camera positions and parameters. Thus, it is not necessary to track the position and movement of the line-laser probe. This yields an inexpensive laser-scanner system where every hardware component can be modified individually for experiments and future extensions of the system.

t

laser scanners in engineering are extended versions of

tactile measuring machines. These high

precision devices are typically very expensive and

hardware modifications are not possible without

impairing the precision of the device.

t

laser scanners in engineering are extended versions of

tactile measuring machines. These high

precision devices are typically very expensive and

hardware modifications are not possible without

impairing the precision of the device. For these reasons we built our own laser-scanner system. It is based on a multi-camera reconstruction system developed for fast 3d face reconstructions. Based on this camera system we developed a laser-scanner using GPU accelerated stereo-matching techniques and a hand-held line-laser probe. The resulting reconstruction is solely based on the known camera positions and parameters. Thus, it is not necessary to track the position and movement of the line-laser probe. This yields an inexpensive laser-scanner system where every hardware component can be modified individually for experiments and future extensions of the system.

Available formats: pdf

Citation: BibTeX

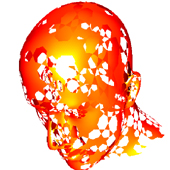

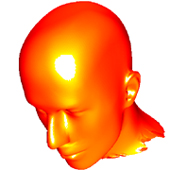

Hybrid face recognition based on real-time multi-camera stereo-matching

Hensler, Denker, Franz, Umlauf

In: G. Bebis et al. (eds.): ISVC 2011, Part II, LNCS 6930, 158-167, 2011.

Multi-camera

systems

and

GPU-based stereo-matching methods allow for a real-time

3d reconstruction of faces. We use the data generated by

such a 3d reconstruction for a hybrid face recognition

system based on color, accuracy, and depth information.

This system is structured in two subsequent phases:

geometry-based data preparation and face recognition

using wavelets and the AdaBoost algorithm. It requires

only one reference image per person. On a data base of

500 recordings, our system achieved detection rates

ranging from 95% to 97% with a false detection rate of

2% to 3%. The computation of the whole process takes

around 1.1 seconds.

Available formats: pdf

Citation: BibTeX

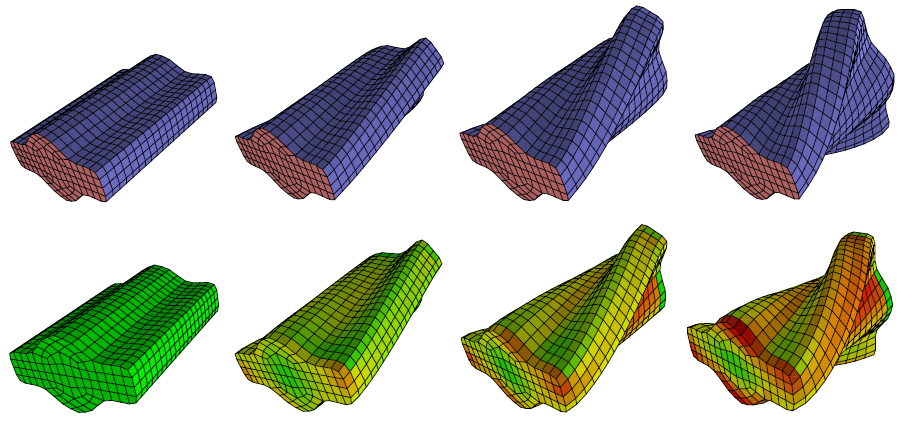

Finite element analysis for linear elastic solids based on subdivision schemes.

Burkhart, Hamann, Umlauf

In: Middel et al. (eds.), Visualization of Large and Unstructured Data Sets, OASICS, 19: 1-10, 2011.

Finite element methods

are used in various areas ranging from mechanical

engineering to computer graphics and bio-medical

applications. In engineering, a critical point is

the gap between CAD and CAE. This gap results from

different representations used for geometric design

and physical simulation.

We present two different approaches for using subdivision solids as the only representation for modeling, simulation and visualization. This has the advantage that no data must be converted between the CAD and CAE phases. The first approach is based on an adaptive and feature-preserving tetrahedral subdivision scheme. The second approach is based on Catmull-Clark subdivision solids.

We present two different approaches for using subdivision solids as the only representation for modeling, simulation and visualization. This has the advantage that no data must be converted between the CAD and CAE phases. The first approach is based on an adaptive and feature-preserving tetrahedral subdivision scheme. The second approach is based on Catmull-Clark subdivision solids.

Available formats: pdf

Citation: BibTeX

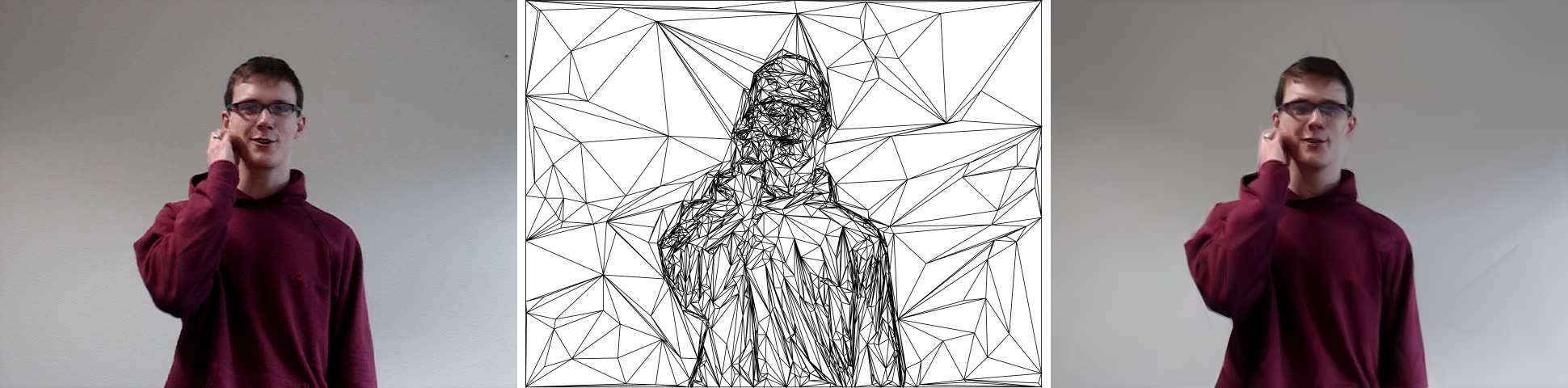

An accurate real-time multi-camera matching on the GPU for 3d reconstruction.

Denker, Umlauf

Journal of WSCG, 19: 9-16, 2011.

Using multi-ca

mera

matching techniques for 3d reconstruction there is

usually the trade-off between the quality

of

the

computed

depth

map

and

the

speed

of

the

computations.

Whereas

high

quality matching methods take several seconds to several

minutes to compute a depth map for one set of

images, real-time methods achieve only low quality

results. In this paper we present a multi-camera

matching method that runs in real-time

and yields high resolution depth maps.

mera

matching techniques for 3d reconstruction there is

usually the trade-off between the quality

of

the

computed

depth

map

and

the

speed

of

the

computations.

Whereas

high

quality matching methods take several seconds to several

minutes to compute a depth map for one set of

images, real-time methods achieve only low quality

results. In this paper we present a multi-camera

matching method that runs in real-time

and yields high resolution depth maps.Our method is based on a novel multi-level combination of normalized cross correlation, deformed matching windows based on the multi-level depth map information, and sub-pixel precise disparity maps. The whole process is implemented completely on the GPU. With this approach we can process four 0.7 megapixel images in 129 milliseconds to a full resolution 3d depth map. Because our technique is tailored for the recognition of non-technical shapes, our target application is face recognition.

Available formats: pdf

Citation: BibTeX

Survey on benchmarks for a GPU based multi camera stereo matching algorithm.

Denker, Umlauf

In: Middel et al. (eds.), Visualization of Large and Unstructured Data Sets, OASICS, 19: 20-26, 2011.

Stereo matching

algorithms and multi camera reconstruction algorithms

are usually compared using benchmarks. These

benchmarks compare the quality of the resulting depth

map or reconstructed surface mesh. We describe the

differences between several known stereo and

multi-view stereo benchmarks and their various

datasets. Also the modifications that are necessary to

use our own GPU based multi camera stereo matching

algorithm with the data from these benchmarks are

discussed.

Available formats: pdf

Citation: BibTeX

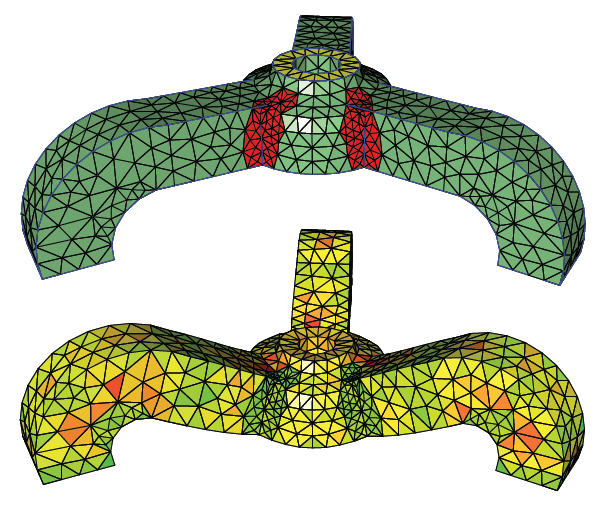

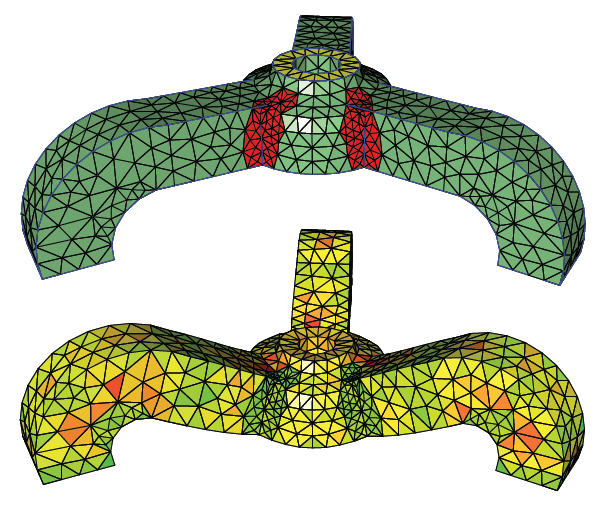

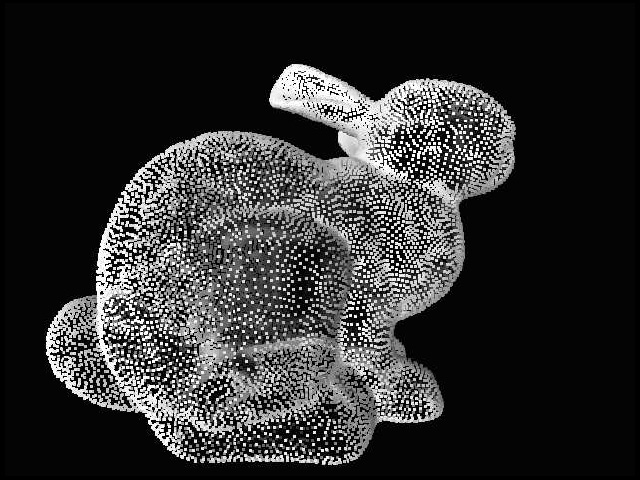

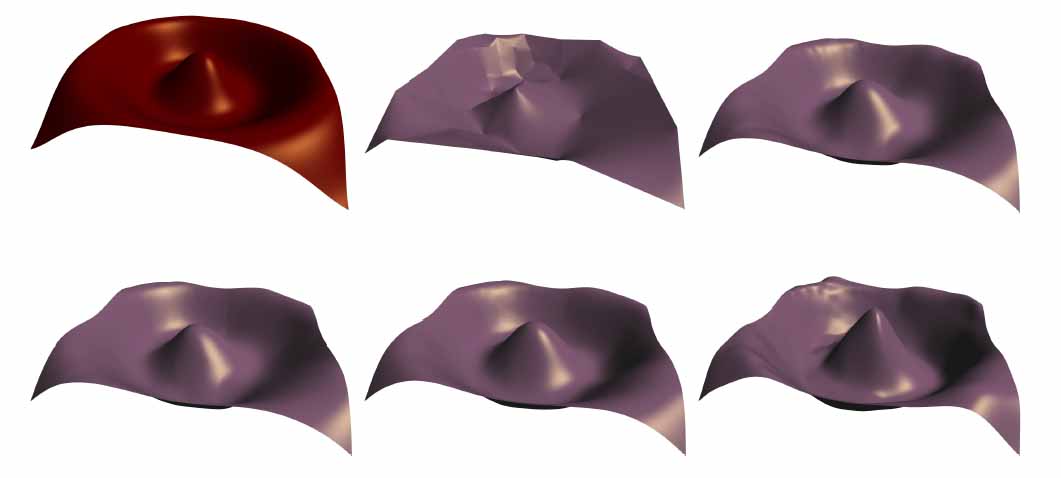

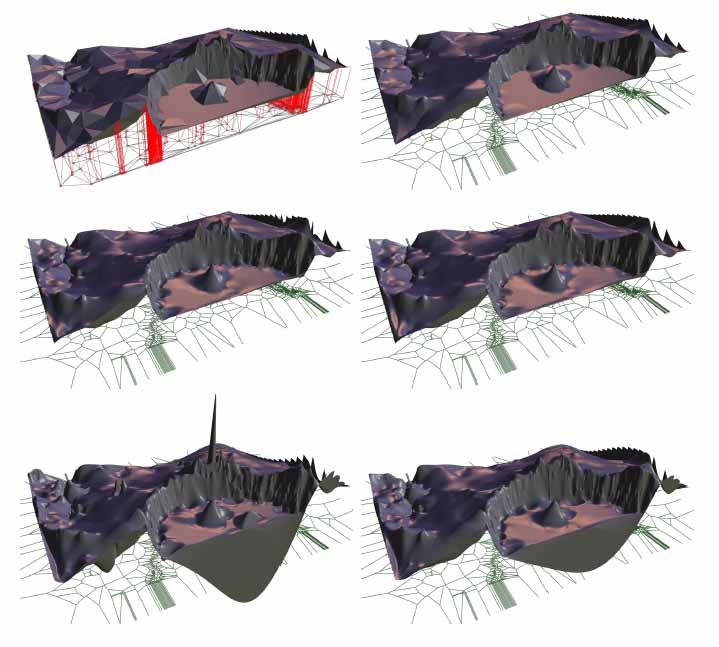

Real-time triangulation of point streams.

Denker, Lehner, Umlauf

Engineering with Computers, 27:67-80, 2011.

Hand-held laser scanners are

commonly used in industry for reverse

engineering and quality measurements. In this process, it is

difficult for the human operator to scan the target object

completely and uniformly. Therefore, an interactive triangulation

of the scanned points can

assist the operator in this task.

I n this paper we describe

the technical and implementational details of our real-time

triangulation approach for point streams, presented

at the 17th International Meshing Roundtable. Our method

computes a triangulation of the point stream generated by

the laser scanner online, i.e., the data points are added to

the triangulation as they are received from the scanner.

Multiple scanned areas and areas with a higher point density

result in a finer mesh and a higher accuracy. On the other hand, the

vertex density adapts to the estimated surface

curvature. To guide the operator the resulting triangulation is rendered

with a visualization of its uncertainty and the

display of an optimal scanning direction.

n this paper we describe

the technical and implementational details of our real-time

triangulation approach for point streams, presented

at the 17th International Meshing Roundtable. Our method

computes a triangulation of the point stream generated by

the laser scanner online, i.e., the data points are added to

the triangulation as they are received from the scanner.

Multiple scanned areas and areas with a higher point density

result in a finer mesh and a higher accuracy. On the other hand, the

vertex density adapts to the estimated surface

curvature. To guide the operator the resulting triangulation is rendered

with a visualization of its uncertainty and the

display of an optimal scanning direction.

Available formats: pdfI

n this paper we describe

the technical and implementational details of our real-time

triangulation approach for point streams, presented

at the 17th International Meshing Roundtable. Our method

computes a triangulation of the point stream generated by

the laser scanner online, i.e., the data points are added to

the triangulation as they are received from the scanner.

Multiple scanned areas and areas with a higher point density

result in a finer mesh and a higher accuracy. On the other hand, the

vertex density adapts to the estimated surface

curvature. To guide the operator the resulting triangulation is rendered

with a visualization of its uncertainty and the

display of an optimal scanning direction.

n this paper we describe

the technical and implementational details of our real-time

triangulation approach for point streams, presented

at the 17th International Meshing Roundtable. Our method

computes a triangulation of the point stream generated by

the laser scanner online, i.e., the data points are added to

the triangulation as they are received from the scanner.

Multiple scanned areas and areas with a higher point density

result in a finer mesh and a higher accuracy. On the other hand, the

vertex density adapts to the estimated surface

curvature. To guide the operator the resulting triangulation is rendered

with a visualization of its uncertainty and the

display of an optimal scanning direction.Citation: BibTeX

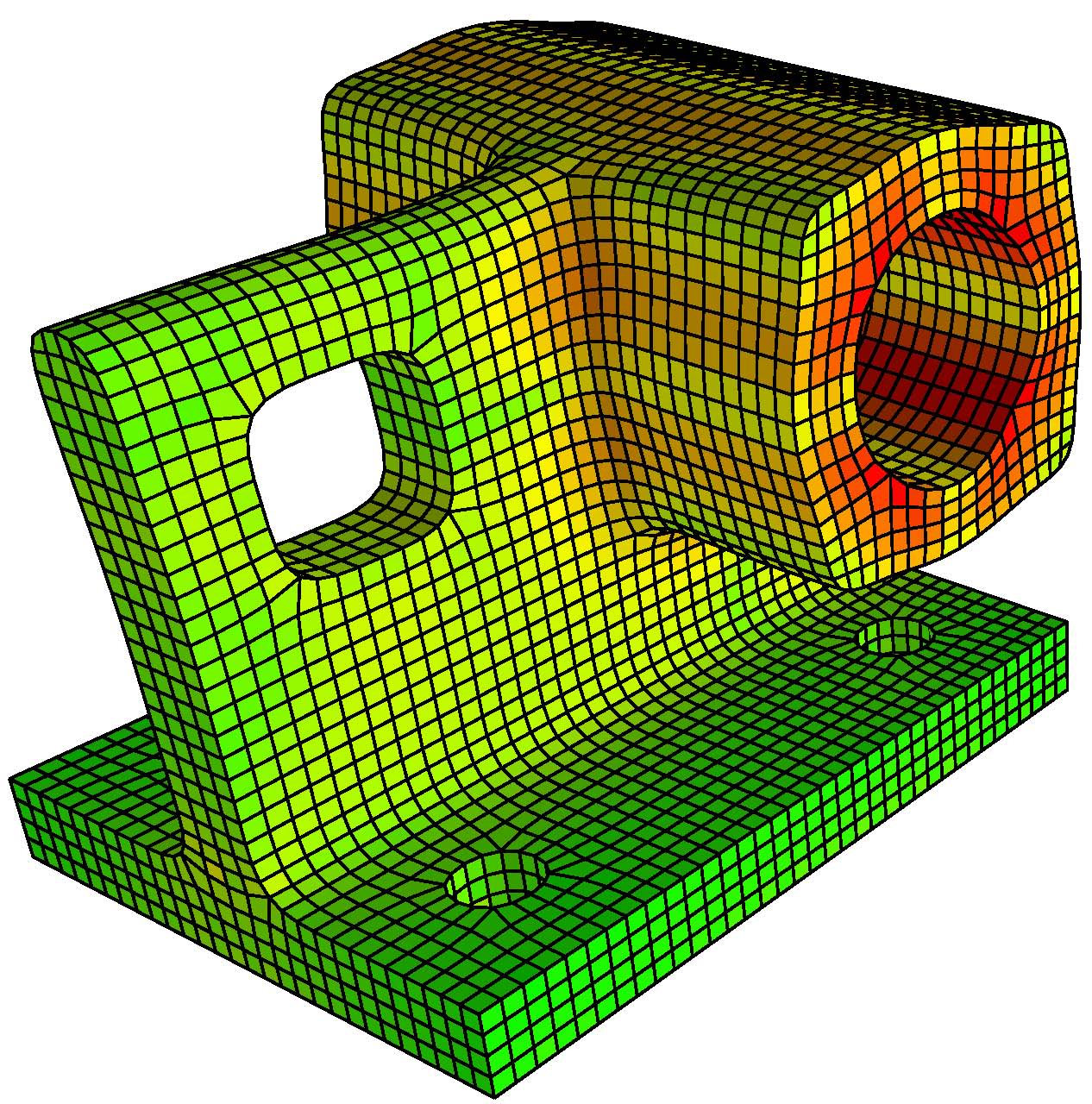

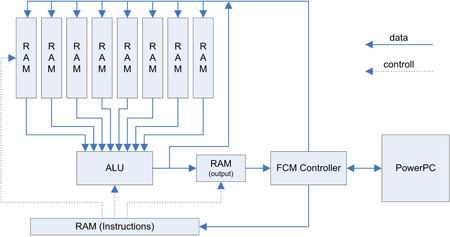

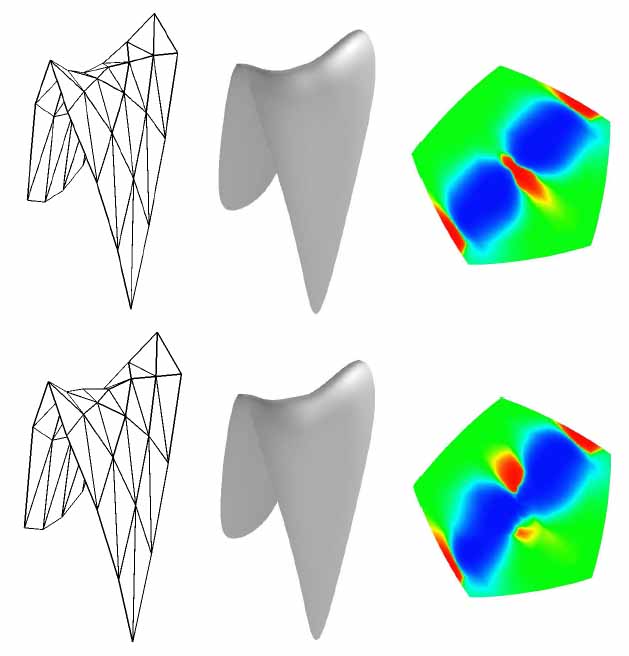

Iso-geometric analysis based on Catmull-Clark subdivision solids.

Burkhart, Hamann, Umlauf

Computer Graphics Forum, 29(5): 1575-1584, 2010.

We

present a volumetric iso-geometric finite element

analysis based on Catmull-Clark solids. This

concept allows one to use the same representation for

the modeling, the physical simulation,

and the visualization, which

optimizes the design process and narrows the gap

between CAD and CAE. In our method the

boundary of the solid model is a Catmull-Clark surface

with optional corners and creases to

support the modeling phase. The

crucial point in the simulation phase is the need to

perform efficient integration for the elements. We

propose a method similar to the standard subdivision

surface evaluation technique, such

that numerical quadrature can be used.

Experiments show that our approach converges faster than methods based on tri-linear and tri-quadratic elements. However, the topological structure of Catmull-Clark elements is as simple as the structure of linear elements. Furthermore, the Catmull-Clark elements we use are C2-continuous on the boundary and in the interior except for irregular vertices and edges.

Experiments show that our approach converges faster than methods based on tri-linear and tri-quadratic elements. However, the topological structure of Catmull-Clark elements is as simple as the structure of linear elements. Furthermore, the Catmull-Clark elements we use are C2-continuous on the boundary and in the interior except for irregular vertices and edges.

Available formats: pdf

Citation: BibTeX

Adaptive tetrahedral subdivision for finite element analysis.

Burkhart, Hamann, Umlauf

Computer Graphics International, 2010.

Rea listic behavior of

deformable objects is essential for many

applications in computer graphics, engineering, or

medicine. Typical techniques are either based on

mass-spring-damper models, boundary element methods, finite

difference methods, or finite element methods. These methods

either lack accuracy or are computationally

very expensive. If accuracy is required FEM

computations use adaptive refinement, where regions with high

gradients are refined locally. The bottleneck of this

approach is still the gap between CAD and CAE

representations.

listic behavior of

deformable objects is essential for many

applications in computer graphics, engineering, or

medicine. Typical techniques are either based on

mass-spring-damper models, boundary element methods, finite

difference methods, or finite element methods. These methods

either lack accuracy or are computationally

very expensive. If accuracy is required FEM

computations use adaptive refinement, where regions with high

gradients are refined locally. The bottleneck of this

approach is still the gap between CAD and CAE

representations.

We present an approach to utilize solid subdivision for finite element simulations using an adaptive tetrahedral subdivision scheme based on sqrt(3)-subdivision for triangular meshes. The advantage of this approach is the use of the subdivision representation the modeling, the visualization and the simulation of the solid model.

listic behavior of

deformable objects is essential for many

applications in computer graphics, engineering, or

medicine. Typical techniques are either based on

mass-spring-damper models, boundary element methods, finite

difference methods, or finite element methods. These methods

either lack accuracy or are computationally

very expensive. If accuracy is required FEM

computations use adaptive refinement, where regions with high

gradients are refined locally. The bottleneck of this

approach is still the gap between CAD and CAE

representations.

listic behavior of

deformable objects is essential for many

applications in computer graphics, engineering, or

medicine. Typical techniques are either based on

mass-spring-damper models, boundary element methods, finite

difference methods, or finite element methods. These methods

either lack accuracy or are computationally

very expensive. If accuracy is required FEM

computations use adaptive refinement, where regions with high

gradients are refined locally. The bottleneck of this

approach is still the gap between CAD and CAE

representations.We present an approach to utilize solid subdivision for finite element simulations using an adaptive tetrahedral subdivision scheme based on sqrt(3)-subdivision for triangular meshes. The advantage of this approach is the use of the subdivision representation the modeling, the visualization and the simulation of the solid model.

Available formats: pdf

Citation: BibTeX

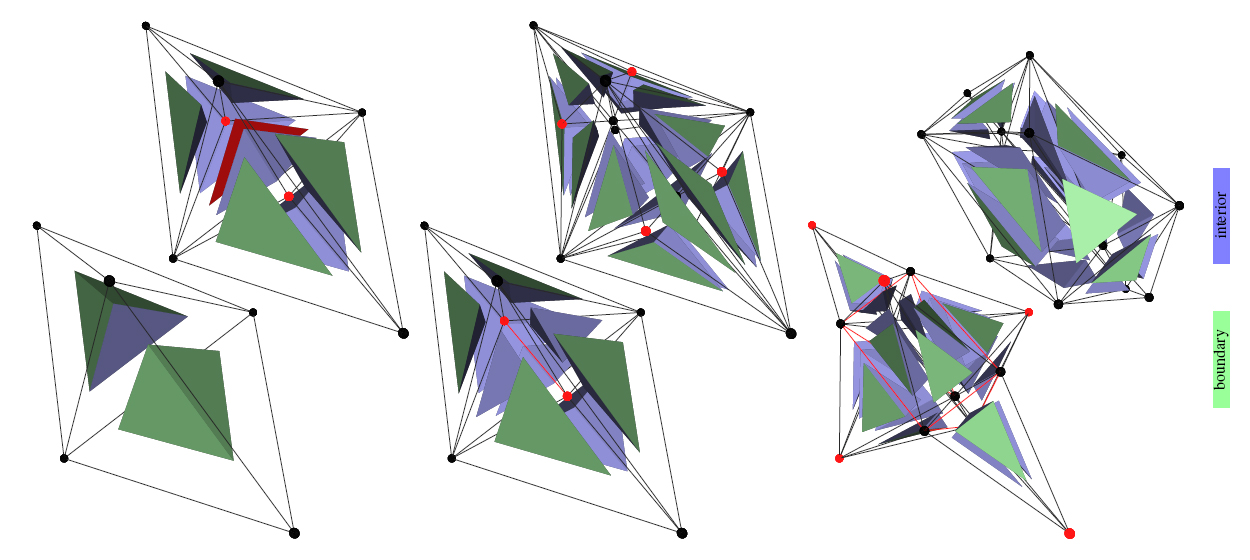

Adaptive and feature-preserving subdivision for high-quality tetrahedral meshes.

Burkhart, Hamann, Umlauf

Computer Graphics Forum, 29(1): 117-127, 2010.

We present

an adaptive subdivision scheme for unstructured

tetrahedral

meshes inspired by the sqrt(3)-subdivision scheme for

triangular meshes. Existing tetrahedral

subdivision schemes do not support adaptive refinement

and have traditionally been driven by the need to

generate smooth three-dimensional deformations of

solids. These schemes use edge bisections to subdivide

tetrahedra, which generates octahedra in addition to

tetrahedra. To split octahedra into tetrahedra one

routinely chooses a direction for the diagonals for the

subdivision step. We propose a new topology-based

refinement operator that generates only

tetrahedra and supports adaptive refinement. Our

tetrahedral subdivision algorithm is motivated by the

need to have one representation for the modeling,

the simulation and the visualization and so to

bridge the gap between CAD and CAE. Our subdivision

algorithm design emphasizes on geometric quality of the

tetrahedral meshes, local and adaptive refinement

operations, and preservation of sharp geometric features

on the boundary and in the interior of the physical

domain.

Available formats: pdf

Citation: BibTeX

Geometric properties of the adaptive Delaunay triangulation.

Bobach, Constantiniu, Steinmann, Umlauf

In: M. Dæhlen et al. (eds.), Mathematical Methods for Curves and Surfaces, Springer-Verlag, 41-54, 2010.

Recently, the Adaptive

Delaunay Tessellation (ADT) was introduced in the

context of computational mechanics as a tool to support

Voronoi-based nodal integration schemes in the finite

element method. While focusing on applications in

mechanical engineering, the former presentation lacked

rigorous proofs for the claimed geometric properties of

the ADT necessary for the computation of the nodal

integration scheme. This paper gives the missing proofs

for the three main claims which are uniqueness of the

ADT, connectedness of the ADT, and coverage of the

Voronoi tiles by adjacent ADT tiles. Furthermore, this

paper provides a critical assessment of the ADT for

arbitrary point sets.

Available formats: pdf

Citation: BibTeX

Available formats: pdf

Citation: BibTeX

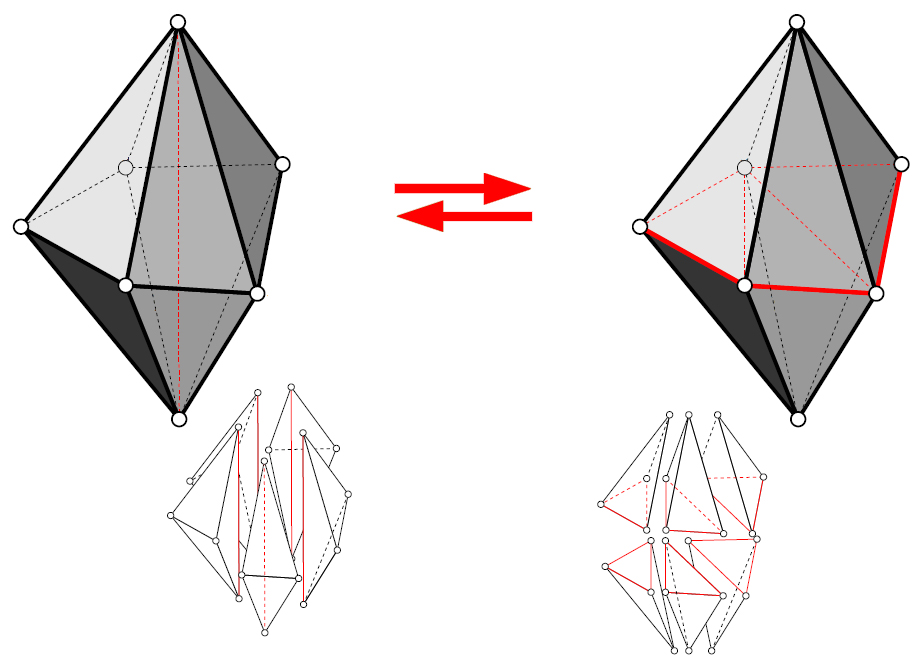

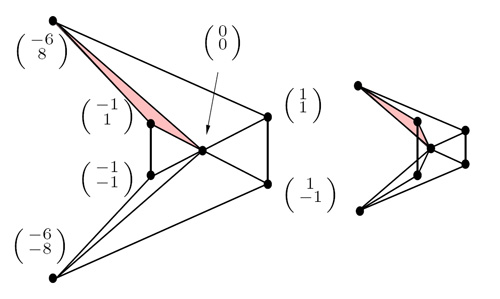

Generalized swap operations for tetrahedrizations.

Lehner, Hamann, Umlauf

In: H.Hagen (eds): Scientific Visualization - Advanced Concepts, 30-44, 2010.

Mesh

optimization of 2D and 3D triangulations is used in

multiple applications extensively. For example, mesh

optimization is crucial in the context of adaptively

discretizing geometry, typically representing the

geometrical boundary conditions of a numerical

simulation, or adaptively discretizing

the entire space over which various dependent variables

of a numerical simulation must be approximated. Together

with operations applied to the vertices the so-called

edge or face swap operations are the

building block of all optimization approaches. To speed

up the optimization or to avoid local minima of the

function measuring overall mesh quality these swaps are

combined to generalized swap operations with a less

local impact on the triangulation. Despite the fact that

these swap operations change only the connectivity of a

triangulation, it depends on the geometry of the

triangulation whether the generalized swap will generate

inconsistently oriented or degenerate simplices. Because

these are undesirable for numerical reasons, this paper

is concerned with geometric criteria that guarantee the

generalized swaps for a 3D triangulation to yield only

valid, non-degenerate triangulations.

Mesh

optimization of 2D and 3D triangulations is used in

multiple applications extensively. For example, mesh

optimization is crucial in the context of adaptively

discretizing geometry, typically representing the

geometrical boundary conditions of a numerical

simulation, or adaptively discretizing

the entire space over which various dependent variables

of a numerical simulation must be approximated. Together

with operations applied to the vertices the so-called

edge or face swap operations are the

building block of all optimization approaches. To speed

up the optimization or to avoid local minima of the

function measuring overall mesh quality these swaps are

combined to generalized swap operations with a less

local impact on the triangulation. Despite the fact that

these swap operations change only the connectivity of a

triangulation, it depends on the geometry of the

triangulation whether the generalized swap will generate

inconsistently oriented or degenerate simplices. Because

these are undesirable for numerical reasons, this paper

is concerned with geometric criteria that guarantee the

generalized swaps for a 3D triangulation to yield only

valid, non-degenerate triangulations.Natural neighbor extrapolation using ghost points.

Bobach, Farin, Hansford, Umlauf

Computer Aided-Design, 41(5): 350-365, 2009.

Among locally supported

scattered data schemes, natural neighbor interpolation

has some unique features that makes it interesting for a

range of applications. However, its restriction to the

convex hull of the data sites is a limitation that has

not yet been satisfyingly overcome. We use this setting

to discuss some aspects of scattered data extrapolation

in general, compare existing methods, and propose a

framework for the extrapolation of natural

neighbor interpolants on the basis of dynamic ghost

points.

Available formats: pdf

Citation: BibTeX

Online triangulation of laser-scan data.

Denker, Lehner, Umlauf

In: R. Garimella (ed.), Proceedings of the 17th International Meshing Roundtable, Springer-Verlag, 415-432, 2008.

Hand-held laser scanners

are used massively in industry for reverse engineering

and quality measurements. In this process, it is

difficult for the human operator to cover the scanned

object completely and uniformly. Therefore, an

interactive triangulation of the scanned surface

points can assist the human operator in this task.

Our method computes a triangulation of the point stream generated by the laser scanner online, i.e., the data points are added to the triangulation as they are received from the scanner. Multiple scanned areas and areas with a higher point density result in a finer mesh and a higher accuracy. On the other hand, the vertex density adapts to the estimated surface curvature. To assist the human operator the resulting triangulation is rendered with a visualization of its faithfulness. Additionally, our triangulation method allows for a level-of-detail representation to reduce the mesh complexity for fast rendering on low-cost graphics hardware.

Our method computes a triangulation of the point stream generated by the laser scanner online, i.e., the data points are added to the triangulation as they are received from the scanner. Multiple scanned areas and areas with a higher point density result in a finer mesh and a higher accuracy. On the other hand, the vertex density adapts to the estimated surface curvature. To assist the human operator the resulting triangulation is rendered with a visualization of its faithfulness. Additionally, our triangulation method allows for a level-of-detail representation to reduce the mesh complexity for fast rendering on low-cost graphics hardware.

Available formats: pdf

Citation: BibTeX

Videos: avi

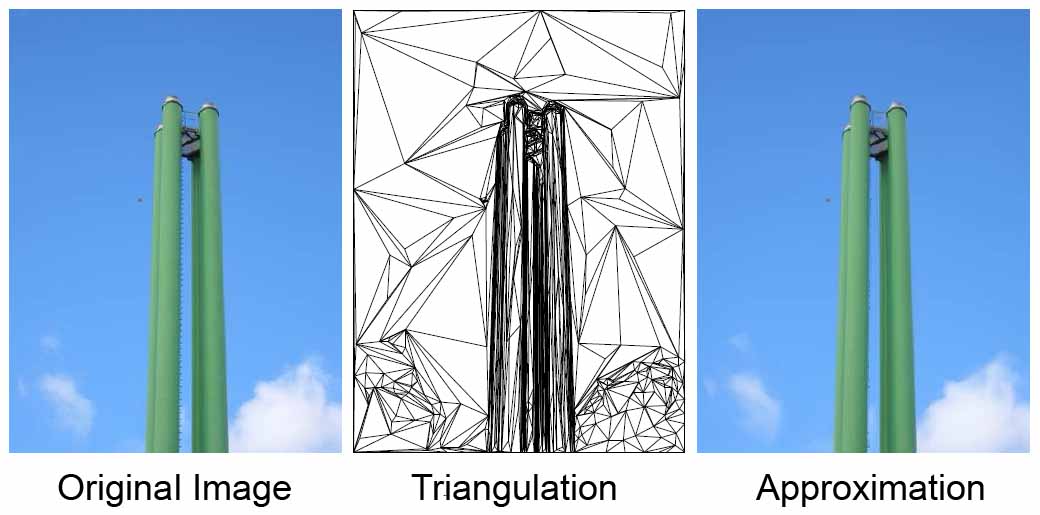

Video compression using data-dependent triangulations.

Lehner, Umlauf, Hamann

In: Y. Xiao, E. ten Thij (eds.), Computer Graphics and Visualization '08, 244-248, 2008.

Best short-paper award.

We present a method for

compression of video clips using data-dependent

triangulations. This technique utilizes the time

coherence of a video to transfer information from one

frame to the next, reducing the computation time for the

compression. The results of this method are compared to

those obtained with MJPEG and MPEG-2.

Available formats: pdf

Citation: BibTeX

Videos: avi

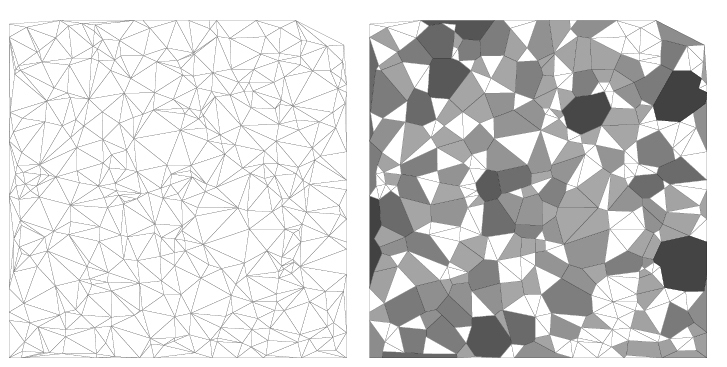

The adaptive Delaunay tessellation: A neighborhood covering meshing technique.

Constantiniu, Steinmann, Bobach, Farin, Umlauf

Computational Mechanics, 42(5), 655-669, 2008.

In

this paper we propose an

unstructured hybrid tessellation of a scattered point

set that minimally covers the proximal space around

each point. The mesh is automatically obtained in a

bounded period of time by transforming an initial

Delaunay tessellation. Novel types of polygonal

interpolants are used for interpolation applications

and geometric quantities of the elements make them

also useful for discretization schemes. The approach

proves to be superior to classical Delaunay one in a

finite element

context.

Available formats: pdf

Citation: BibTeX

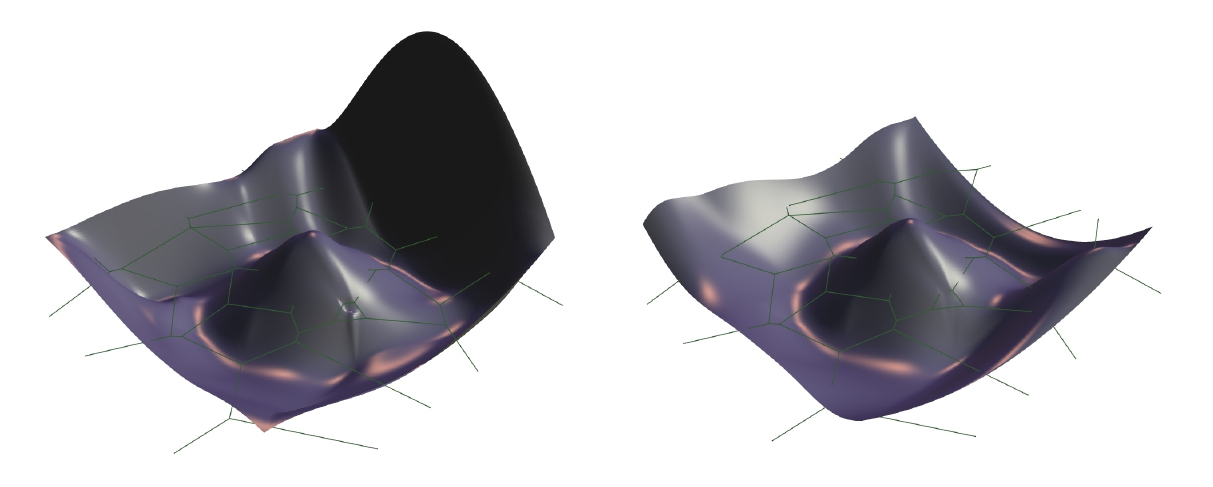

Local energy-optimizing subdivision

algorithms.

Local energy-optimizing subdivision

algorithms.Ginkel, Umlauf

Computer Aided Geometric Design, 25(3): 137-147, 2008.

In

this paper a method is presented to fair the limit

surface of a subdivision algorithm locally around an

extraordinary point. The dominant six eigenvalues of

the subdivision matrix have to satisfy linear and

quadratic equality- and inequality-constraints in

order to guarantee normal-continuity and bounded

curvature at the extraordinary point. All other

eigenvalues can be chosen arbitrarily within certain

intervals and therefore can be used to optimize the

shape of the subdivision surface by minimizing

quadratic energy functionals. Additionally, if the

sub- and subsub-dominant eigenvalues vary within

predefined intervals, C1-regularity

of

the

surface

and

locality

of

the

stencils

can

be

guaranteed,

although

eigenvectors

are

changed.

Available formats: pdf

Citation: BibTeX

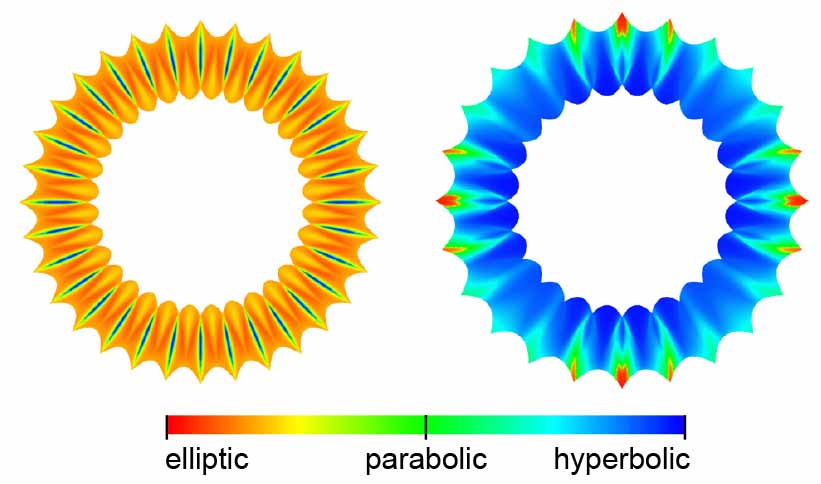

Symmetry

of shape

charts.

Symmetry

of shape

charts.Ginkel, Umlauf

Computer Aided Geometric Design, 24(3): 131-136, 2008.

For

subdivision surfaces, the so-called shape chart can be

used to characterize the curvature behavior at an

extraordinary point a priori from the initial control

net. Of late, it has been used in different approaches

to tune subdivision algorithms to handle the so-called

hybrid shapes. For this the shape charts are computed

numerically. In this paper, symmetries of shape charts

are analyzed that can be used to simplify the

computations and to reduce the computation costs

significantly.

Available formats: pdf

Citation: BibTeX

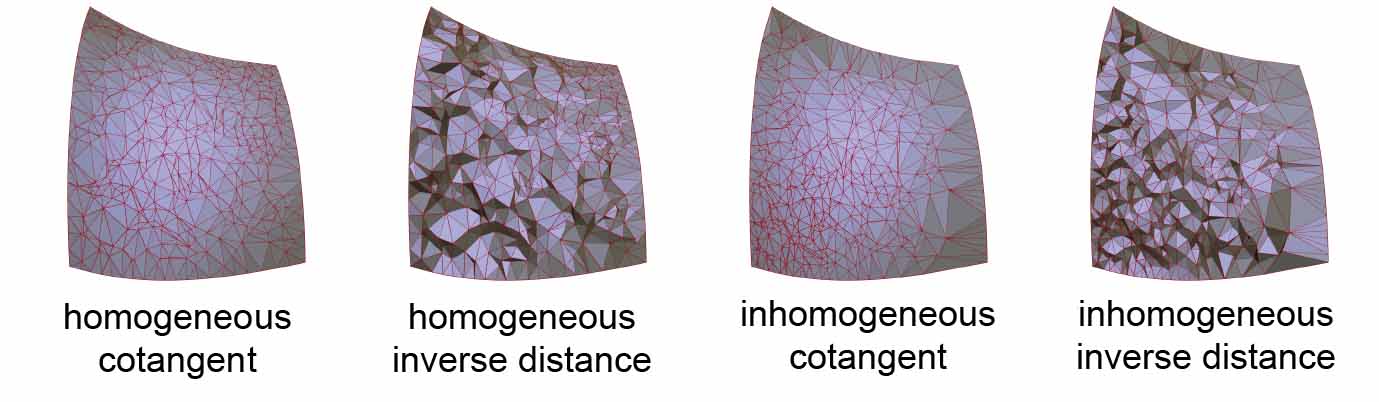

Discrete harmonic functions from local coordinates.

Bobach, Farin, Hansford, Umlauf

In: R. Martin, M. Sabin, J. Winkler (eds.), Mathematics of Surfaces XII, Springer-Verlag, 93-103, 2007.

In this work we

focus on approximations of continuous harmonic functions

by discrete harmonic functions based on the discrete

Laplacian in a triangulation of a point set. We show how

the choice of edge weights based on generalized

barycentric coordinates influences the approximation

quality of discrete harmonic functions. Furthermore, we

consider a varying point set to demonstrate that

generalized barycentric coordinates based on natural

neighbors admit discrete harmonic functions that

continuously depend on the point set.

In this work we

focus on approximations of continuous harmonic functions

by discrete harmonic functions based on the discrete

Laplacian in a triangulation of a point set. We show how

the choice of edge weights based on generalized

barycentric coordinates influences the approximation

quality of discrete harmonic functions. Furthermore, we

consider a varying point set to demonstrate that

generalized barycentric coordinates based on natural

neighbors admit discrete harmonic functions that

continuously depend on the point set.Citation: BibTeX

Natural neighbor concepts in scattered data interpolation and discrete function approximation.

Bobach, Umlauf

In: H. Hagen, M. Hering-Bertram, C. Garth (eds.), GI Lecture Notes in Informatics, Visualization of Large and Unstructured Data Sets, 23-35, 2007.

The concept of natural neighbors employs the

notion of distance to define local neighborhoods in

discrete data. Especially when querying and accessing

large scale data, it is important

to limit the amount of data that has to be processed for

an answer. Because of its implicit definition on

distances, the natural neighbor concept is extremely

well suited to provide meaningful neighborhoods in

spatial data with a scattered, inhomogeneous

distribution.

This paper revisits some unique properties of natural neighbor based methods and summarizes important findings for their successful application to scattered data interpolation, and the computation of discrete harmonic functions.

This paper revisits some unique properties of natural neighbor based methods and summarizes important findings for their successful application to scattered data interpolation, and the computation of discrete harmonic functions.

Citation: BibTeX

Analyzing a generalized Loop subdivision scheme.

Ginkel, Umlauf

Computing, 79(2-4), 353-363, 2007.

In this paper a

class subdivision schemes generalizing the algorithm of

Loop is presented. The stencils have same support as

those from the algorithm of Loop, but allow a variety of

weights. By varying the weights a class of C1

regular subdivision schemes is obtained. This class

includes the algorithm of Loop and the midpoint schemes

of order one and two for triangular nets. The proof of C1

regularity of the limit surface for arbitrary triangular

nets is provided for any choice of feasible weights.

The purpose of this generalization of the subdivision algorithm of Loop is to demonstrate the capabilities of the applied analysis technique. Since this class includes schemes that do not generalize box spline subdivision, the analysis of the characteristic map is done with a technique that does not need an explicit piecewise polynomial representation. This technique is computationally simple and can be used to analyze classes of subdivision schemes. It extends previously presented techniques based on geometric criteria.

The purpose of this generalization of the subdivision algorithm of Loop is to demonstrate the capabilities of the applied analysis technique. Since this class includes schemes that do not generalize box spline subdivision, the analysis of the characteristic map is done with a technique that does not need an explicit piecewise polynomial representation. This technique is computationally simple and can be used to analyze classes of subdivision schemes. It extends previously presented techniques based on geometric criteria.

Available formats: pdf

Citation: BibTeX

Tuning subdivision

algorithms using constrained energy minimization.

Tuning subdivision

algorithms using constrained energy minimization.Ginkel, Umlauf

In: R. Martin, M. Sabin, J. Winkler (eds.), Mathematics of Surfaces XII, Springer-Verlag, 166-176, 2007.

In this paper a

method is presented to fair the limit surface of a

subdivision algorithm around an extraordinary point. The

eigenvalues and eigenvectors of the subdivision matrix

determine the continuity and shape of the limit surface.

The dominant, sub-dominant and subsub-dominant

eigenvalues should satisfy linear and quadratic

equality- and inequality-constraints to guarantee

continuous normal and bounded curvature globally. The

remaining eigenvalues need only satisfy linear

inequality-constraints. In general, except for the

dominant eigenvalue, all eigenvalues can be used to

optimize the shape of the limit surface with our method.

Available formats: pdf

Citation: BibTeX

Normals of subdivision surfaces and their control polyhedra.

Ginkel, Peters, Umlauf

Computer Aided Geometric Design, 24(2): 112-116, 2007.

For planar spline

curves and bivariate box-spline functions, the cone of

normals of a polynomial spline piece is enclosed by the

cone of normals of its spline control polyhedron. This

note collects some concrete examples to show that this

is not true for subdivision surfaces, both at

extraordinary points and in the regular, box-spline

setting. A larger set, the cross products of families of

control net edges, has to be considered.

Available formats: pdf

Citation: BibTeX

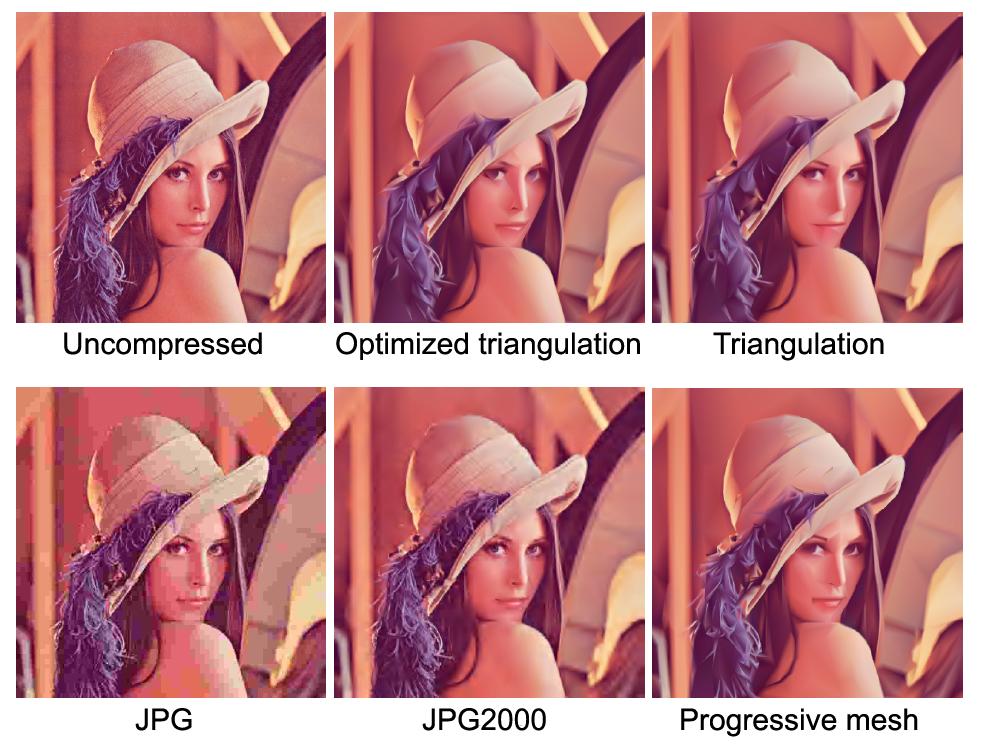

Image

compression using data-dependent triangulations.

Image

compression using data-dependent triangulations.Lehner, Umlauf, Hamann

In: G. Bebis et al. (eds.), Advances in Visual Computing, Part I, Springer-Verlag, 352-362, 2007.

We present a method

to speed up the computation of a high-quality

data-dependent triangulation approximating an image

using simulated annealing by probability distributions

guided by local approximation error and its variance.

The triangulation encodes the image, yielding

compression rates comparable to or even superior to JPEG

and JPEG2000 compression.

The specific contributions of our paper are a speed-up of the simulated annealing optimization and a comparison of our approach to other image approximation and compression methods. Furthermore, we propose an adaptive vertex insertion/removal strategy and termination criteria for the simulated annealing to achieve specified approximation error bounds.

The specific contributions of our paper are a speed-up of the simulated annealing optimization and a comparison of our approach to other image approximation and compression methods. Furthermore, we propose an adaptive vertex insertion/removal strategy and termination criteria for the simulated annealing to achieve specified approximation error bounds.

Available formats: pdf

Citation: BibTeX

Survey of techniques for data-dependent

triangulations.

Survey of techniques for data-dependent

triangulations.Lehner, Umlauf, Hamann

In: H. Hagen, M. Hering-Bertram, C. Garth (eds.), GI Lecture Notes in Informatics, Visualization of Large and Unstructured Data Sets, 178-187, 2007.

We present a survey

of different techniques to approximate a color image

using a piecewise linear interpolation induced by a

triangulation of the image domain. We also include a

detailed description of a method we designed. We give a

short overview of possible applications and extensions.

Available formats: pdf

Citation: BibTeX

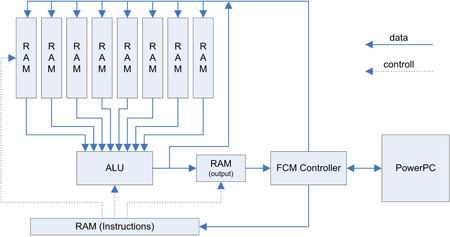

Embedded

vertex shader in FPGA.

Embedded

vertex shader in FPGA.Middendorf, Mühlbauer, Umlauf, Bobda

In: A. Rettberg et al. (eds.), Embedded System Design: Topics, Techniques and Trendes, Springer, 155-164, 2007.

Real-time 3D

visualization of objects or information becomes

increasingly important in everyday life e.g. in cellular

phones or mobile systems. Care should be taken in the

design and implementation  of 3D

rendering in such embedded devices like handheld devices

in order to meet the performance requirement, while

maintaining power consumption low. In this work, the

design and implementation of a vertex shader on a

reconfigurable hardware is presented. The main focus is

placed on the efficient hardware/software partitioning

of the vertex shader computation, in order to maximize

the performance while maintaining a high flexibility.

The resulting solution must be compatible to existing

vertex shaders in oder to allow the large amount of

existing program to be easily ported to our platform. A

prototype consisting of a PowerPC, peripherals and some

custom hardware modules is realized a on an FPGA-board.

The implementation of a point rendering shows

considerable speed up compared to a pure software

solution.

of 3D

rendering in such embedded devices like handheld devices

in order to meet the performance requirement, while

maintaining power consumption low. In this work, the

design and implementation of a vertex shader on a

reconfigurable hardware is presented. The main focus is

placed on the efficient hardware/software partitioning

of the vertex shader computation, in order to maximize

the performance while maintaining a high flexibility.

The resulting solution must be compatible to existing

vertex shaders in oder to allow the large amount of

existing program to be easily ported to our platform. A

prototype consisting of a PowerPC, peripherals and some

custom hardware modules is realized a on an FPGA-board.

The implementation of a point rendering shows

considerable speed up compared to a pure software

solution.

of 3D

rendering in such embedded devices like handheld devices

in order to meet the performance requirement, while

maintaining power consumption low. In this work, the

design and implementation of a vertex shader on a

reconfigurable hardware is presented. The main focus is

placed on the efficient hardware/software partitioning

of the vertex shader computation, in order to maximize

the performance while maintaining a high flexibility.

The resulting solution must be compatible to existing

vertex shaders in oder to allow the large amount of

existing program to be easily ported to our platform. A

prototype consisting of a PowerPC, peripherals and some

custom hardware modules is realized a on an FPGA-board.

The implementation of a point rendering shows

considerable speed up compared to a pure software

solution.

of 3D

rendering in such embedded devices like handheld devices

in order to meet the performance requirement, while

maintaining power consumption low. In this work, the

design and implementation of a vertex shader on a

reconfigurable hardware is presented. The main focus is

placed on the efficient hardware/software partitioning

of the vertex shader computation, in order to maximize

the performance while maintaining a high flexibility.

The resulting solution must be compatible to existing

vertex shaders in oder to allow the large amount of

existing program to be easily ported to our platform. A

prototype consisting of a PowerPC, peripherals and some

custom hardware modules is realized a on an FPGA-board.

The implementation of a point rendering shows

considerable speed up compared to a pure software

solution.Available formats: pdf

Comparison of Voronoi based scattered data

interpolation schemes.

Comparison of Voronoi based scattered data

interpolation schemes. Bobach, Bertram, Umlauf

In: J.J. Villanueva (ed.), Proceedings of International Conference on Visualization, Imaging and Image Processing, 342-349, 2006.

Voronoi based

interpolation employs the concept of natural neighbors

to define an interpolating function over discrete data

known at scattered sample points. In this work we review

the two main concepts for improving interpolation

continuity inside the convex hull of the sample domain

and compare four natural neighbor interpolants of C1

and C2

continuity. We give a visual presentation of all

interpolants to provide insight into their overall

behavior in addition to a comparison of their analytical

and practical properties.

Available formats: pdf

Citation: BibTeX

Issues and implementation of C1 and C2 natural neighbor

interpolation.

Issues and implementation of C1 and C2 natural neighbor

interpolation.Bobach, Bertram, Umlauf

In: G. Bebis et al. (eds.), Advances in Visual Computing, Part II, Springer-Verlag, 186-195, 2006.

Smooth local coordinates have been

proposed by Hiyoshi and Sugihara 2000 to improve the

classical Sibson’s and Laplace coordinates. These

smooth local coordinates are computed by integrating

geometric quantities over weights in the power

diagram. In this paper we describe how to

efficiently& implement the Voronoi based C2 local coordinates. The

globally C2 interpolant that Hiyoshi

and Sugihara presented in 2004 is then compared to

Sibson’s and Farin’s C1 interpolants when

applied to scattered data interpolation.

Available formats: pdf

Citation: BibTeX

Natural

neighbor

interpolation

and order of continuity.

Natural

neighbor

interpolation

and order of continuity. Bobach, Umlauf

In: H. Hagen, A. Kerren, P. Dannenmann (eds.), GI Lecture Notes in Informatics, Visualization of Large and Unstructured Data Sets, 69-86, 2006.

In this paper we

give a survey on natural neighbor based interpolation, a

class of local scattered data interpolation schemes that

define their support on natural neighbors in the Voronoi

diagram of the input data sites. We discuss the existing

work with respect to common aspects of scattered data

interpolation and focus on smoothness of the

interpolant.

Available formats: pdf

Citation: BibTeX

Controlling a subdivision

tuning method.

Controlling a subdivision

tuning method.Ginkel, Umlauf

In: A. Cohen, J.-L. Merrien, L.L. Schumaker (eds.), Curve and Surface Fitting, 170-179, 2006.

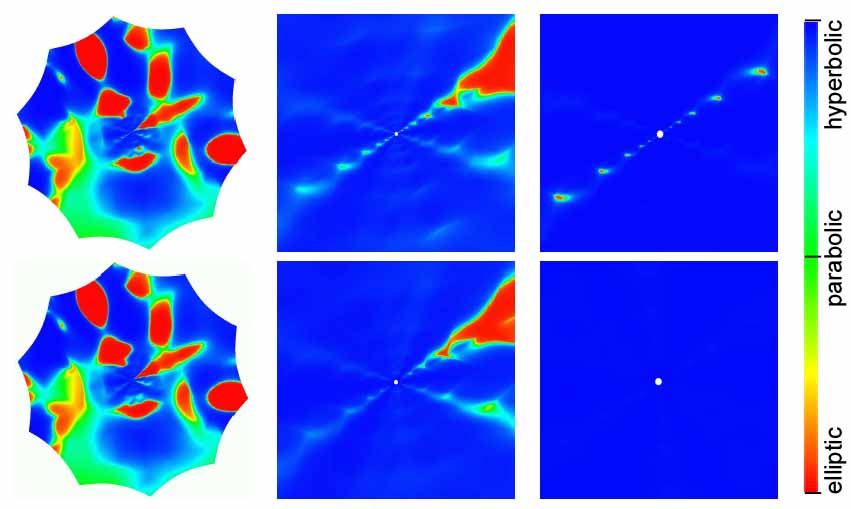

In this paper the

problem of curvature behavior around extraordinary

points of a Loop subdivision surface is addressed. For

subdivision surfaces, configurations of the initial

control net exist in which neither the elliptic nor the

hyperbolic component of the initial control net becomes

dominant. This leads to so-called hybrid shapes which

often cause visible artifacts. A solution to this

problem is to split it into two parts: first an

eigenvalue tuning to allow for bounded Gauss curvature

with arbitrary sign and, second, an eigencoefficient

tuning to avoid hybrid shapes. The techniques for

eigencoefficient tuning will now be analyzed in detail.

The analysis allows to quantify the difference between

the original and the modified surfaces. Additionally,

extensions to the eigencoefficient tuning techniques are

given to solve various problems that might be imposed by

the topology of the initial control net.

Available formats: pdf

Citation: BibTeX

Loop

subdivision with curvature control.

Loop

subdivision with curvature control. Ginkel, Umlauf

In: K. Polthier, A. Sheffer (eds.), Eurographics Symposium on Geometry Processing, Eurographics Association, 163-171, 2006.

In this paper the

problem of curvature behavior around extraordinary

points of a Loop subdivision surface is addressed. A

variant of Loop’s algorithm with small stencils is used

that generates surfaces with bounded curvature and

prescribed elliptic or hyperbolic behavior. We present

two different techniques that avoid the occurrence of

hybrid configurations, so that an elliptic or hyperbolic

shape can be guaranteed. The first technique uses a

symmetric modification of the initial control-net to

avoid hybrid shapes in the vicinity of an extraordinary

point. To keep the difference between the original and

the modified mesh as small as possible the changes are

formulated as correction stencils and spread to a finite

number of subdivision steps. The second technique is

based on local optimization in the frequency domain. It

provides more degrees of freedom and so more control

over the global shape.

Available formats: pdf

Citation: BibTeX

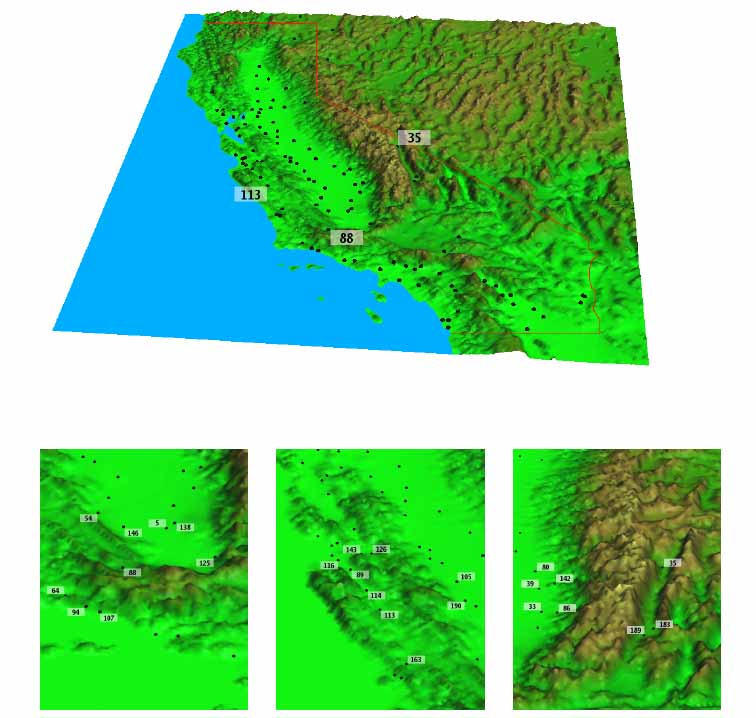

Topographic

distance

functions for interpolation of meteorological data.

Topographic

distance

functions for interpolation of meteorological data.Lehner, Umlauf, Hamann, Ustin

In: H. Hagen, A. Kerren, P. Dannenmann (eds.), GI Lecture Notes in Informatics, Visualization of Large and Unstructured Data Sets, 119-131, 2006.

The reference

evapotranspiration ET0 is an important meteorological

quantity in agriculture and water resource management.

It is usually estimated from other meteorological

quantities measured at weather stations. To estimate ET0

at an arbitrary geographical position these quantities

must be interpolated. The Center for Spatial

Technologies And Remote Sensing (CSTARS) at UC Davis

uses the DayMet approach for this task.

We discuss some inconsistencies within the DayMet approach and suggest improvements. One significant problem of DayMet is the lack of consideration of terrain topography. We define new distance functions that are elevation-dependent and show preliminary results of the comparison of the classic and the improved DayMet approach.

We discuss some inconsistencies within the DayMet approach and suggest improvements. One significant problem of DayMet is the lack of consideration of terrain topography. We define new distance functions that are elevation-dependent and show preliminary results of the comparison of the classic and the improved DayMet approach.

Available formats: pdf

Citation: BibTeX

Parametrizations for triangular Gk spline surfaces of low degree.

Prautzsch, Umlauf

ACM Transactions on Graphics, 25(4), 1281-1293, 2006.

In this paper, we

present regularly parametrized Gk

free form spline surfaces that extend box and half-box

splines over regular triangular grids. The polynomial

degree of these splines is max{4k+1; floor(3k/2 +1)}, where r \in N can be

chosen arbitrarily and determines the flexibility at extraordinary

points. The Gk

splines presented in this paper depend crucially on

low-degree (re-)parametrizations of piecewise polynomial

hole fillings. The explicit construction of such

parametrizations forms the core of this paper and we

present two classes of singular and regular

parametrizations. Also we show how to build box and

half-box spline surfaces of arbitrarily high smoothness

with holes bounded by only n patches in principle.

Available formats: pdf

Citation: BibTeX

On normals and control nets.

On normals and control nets.Ginkel, Peters, Umlauf

In: R. Martin, H. Bez, M. Sabin (eds.): The Mathematics of Surfaces XI, Springer-Verlag, 2005, 233-239.

This paper characterizes when the normals of a spline curve or spline surface lie in the more easily computed cone of the normals of the segments of the spline control net.

Available formats: pdf

Citation: BibTeX

A

nalysis

and tuning of subdivision schemes.

nalysis

and tuning of subdivision schemes.Umlauf

In: B. Jüttler (ed.), Proceedings of Spring Conference on Computer Graphics SCCG 2005, ACM Press, 33-40, 2005.

This paper surveys the

current state in analyzing and tuning of subdivision

algorithms. These two aspects of subdivision algorithms

are very much intertwined with the differential geometry

of the subdivision surface. This paper deals with the

interconnection of these different aspects of

subdivision algorithms and surfaces. The principal idea

for the analysis of a subdivision algorithm dates back

to the late 70s although the overall technique is only

well understood since the early 90s. Most subdivision

algorithms are analyzed today but the proofs involve

time consuming computations. Only recently simple proofs

for a certain class of subdivision algorithms were

developed that are based on geometric reasoning. This

allows for easier smoothness proofs for new developed or

tuned subdivision algorithms.

The analysis of the classical algorithms, such Catmull-Clark, Loop, etc., shows that the subdivision surfaces at the extraordinary points are not as smooth as the rest of the surface. It was also shown that the subdivision surfaces of these classical algorithms cannot model certain basic shapes. One way to tune a stationary subdivision algorithms to overcome this problem is to drop the stationarity while at the same time using the smoothness proof of the stationary algorithms.

The analysis of the classical algorithms, such Catmull-Clark, Loop, etc., shows that the subdivision surfaces at the extraordinary points are not as smooth as the rest of the surface. It was also shown that the subdivision surfaces of these classical algorithms cannot model certain basic shapes. One way to tune a stationary subdivision algorithms to overcome this problem is to drop the stationarity while at the same time using the smoothness proof of the stationary algorithms.

Available formats: pdf

Citation: BibTeX

Post-processing

polygonal

voxel data from numerical simulation.

Post-processing

polygonal

voxel data from numerical simulation. Bobach, Umlauf

Interner Bericht 332/04, Fachbereich Informatik, TU Kaiserslautern, 2004.

Many

applications dealing with geometry acquisition and

processing produce polygonal meshes that carry

artifacts like discretization noise. While there are

many approaches to remove the artifacts by smoothing

or filtering the mesh, they are not tailored to any

specific application demanding certain restrictive

constraints. We show how to incorporate smoothing

schemes based on the general Laplacian approximation

to process the results of flow simulation in car

manufacturing.

In the presented application setting the major restrictions come from the bounding volume of the flow simulation, the so-called installation space. In particular, clean mesh regions (without noise) should not be smoothed while at the same time the installation space must not be violated by the smoothing of the noisy mesh regions. Additionally, aliasing effects at the boundary between clean and noisy mesh regions must be prevented. To address the fact that the meshes come from flow simulation, the presented method is versatile enough to preserve their exact volume and to apply anisotropic filters using the flow information.

In the presented application setting the major restrictions come from the bounding volume of the flow simulation, the so-called installation space. In particular, clean mesh regions (without noise) should not be smoothed while at the same time the installation space must not be violated by the smoothing of the noisy mesh regions. Additionally, aliasing effects at the boundary between clean and noisy mesh regions must be prevented. To address the fact that the meshes come from flow simulation, the presented method is versatile enough to preserve their exact volume and to apply anisotropic filters using the flow information.

Available formats: pdf

Citation: BibTeX

A technique for verifying the smoothness of subdivision schemes.

Umlauf

In: M.L. Lucian, M. Neamtu (eds.), Geometric Modeling and Computing: Seattle 2003, Nashboro Press, 513-521, 2004.

In recent years,

subdivision schemes for surfaces of arbitrary topology

were developed that do not generalize box-splines

subdivision schemes. Examples for this kind of

subdivision schemes are the sqrt(3)-scheme and certain

averaging schemes for triangular and hexahedral nets. In

order to analyze the smoothness of the limit surface, it

is necessary to know if its characteristic map is

regular and injective. For box-spline based schemes this

can be done based on the explicit piecewise polynomial

representation. In this paper, a general approach is

introduced that allows analyzing the characteristic map

even if no explicit representation is available. The

proposed technique requires that the first divided

differences schemes are scalar and use convex

combinations. Then simple geometric properties of the

sub-dominant eigenvectors of the subdivision matrix can

be used to prove regularity and injectivity of the

characteristic map for any valence. This is demonstrated

for a midpoint scheme for triangular nets.

Available formats: pdf

Citation: BibTeX

Computing curvature bounds for bounded curvature subdivision.

Peters, Umlauf